CIA 2010 covert communication websites Expired domain trackers Updated 2025-07-16

When you Google most of the hit domains, many of them show up on "expired domain trackers", and above all on Chinese expired domain trackers for some reason. This suggests that scraping these lists might be a good starting point for obtaining "all expired domains ever". Notable trackers:

- hupo.com: e.g. static.hupo.com/expdomain_myadmin/2012-03-06(国际域名).txt. Heavily IP throttled. Tor hindered more than helped.Scraping script: ../cia-2010-covert-communication-websites/hupo.sh. Scraping does about 1 day every 5 minutes relatively reliably, so about 36 hours / year. Not bad.Results are stored under

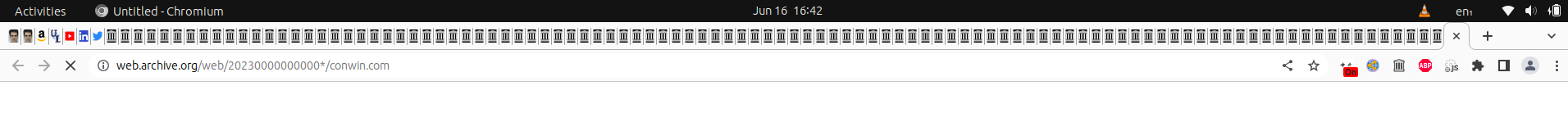

tmp/humo/<day>.Check for hit overlap:The hits are very well distributed amongst days and months, at least they did a good job hiding these potential timing fingerprints. This feels very deliberately designed.grep -Fx -f <( jq -r '.[].host' ../media/cia-2010-covert-communication-websites/hits.json ) cia-2010-covert-communication-websites/tmp/hupo/*There are lots of hits. The data set is very inclusive. Also we understand that it must have been obtains through means other than Web crawling, since it contains so many of the hits.Some of their files are simply missing however unfortunately, e.g. neither of the following exist:webmasterhome.cn did contain that one however: domain.webmasterhome.cn/com/2012-07-01.asp. Hmm. we might have better luck over there then?2018-11-19 is corrupt in a new and wonderful way, with a bunch of trailing zeros:ends in:wget -O hupo-2018-11-19 'http://static.hupo.com/expdomain_myadmin/2018-11-19%EF%BC%88%E5%9B%BD%E9%99%85%E5%9F%9F%E5%90%8D%EF%BC%89.txt hd hupo-2018-11-19000ffff0 74 75 64 69 65 73 2e 63 6f 6d 0d 0a 70 31 63 6f |tudies.com..p1co| 00100000 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................| * 0018a5e0 00 00 00 00 00 00 00 00 00 |.........|More generally, several files contain invalid domain names with non-ASCII characters, e.g. 2013-01-02 contains365<D3>л<FA><C2><CC>.com. Domain names can only contain ASCII charters: stackoverflow.com/questions/1133424/what-are-the-valid-characters-that-can-show-up-in-a-url-host Maybe we should get rid of any such lines as noise.Some files around 2011-09-06 start with an empty line. 2014-01-15 starts with about twenty empty lines. Oh and that last one also has some trash bytes the end<B7><B5><BB><D8>. Beauty. - webmasterhome.cn: e.g. domain.webmasterhome.cn/com/2012-03-06.asp. 
Appears to contain the exact same data as "static.hupo.com"Also has some randomly missing dates like hupo.com, though different missing ones from hupo, so they complement each other nicely.Some of the URLs are broken and don't inform that with HTTP status code, they just replace the results with some Chinese text 无法找到该页 (The requested page could not be found):Several URLs just return length 0 content, e.g.:It is not fully clear if this is a throttling mechanism, or if the data is just missing entirely.

curl -vvv http://domain.webmasterhome.cn/com/2015-10-31.asp * Trying 125.90.93.11:80... * Connected to domain.webmasterhome.cn (125.90.93.11) port 80 (#0) > GET /com/2015-10-31.asp HTTP/1.1 > Host: domain.webmasterhome.cn > User-Agent: curl/7.88.1 > Accept: */* > < HTTP/1.1 200 OK < Date: Sat, 21 Oct 2023 15:12:23 GMT < Server: Microsoft-IIS/6.0 < X-Powered-By: ASP.NET < Content-Length: 0 < Content-Type: text/html < Set-Cookie: ASPSESSIONIDCSTTTBAD=BGGPAONBOFKMMFIPMOGGHLMJ; path=/ < Cache-control: private < * Connection #0 to host domain.webmasterhome.cn left intactStarting around 2018, the IP limiting became very intense, 30 mins / 1 hour per URL, so we just gave up. Therefore, data from 2018 onwards does not contain webmasterhome.cn data.Starting from2013-05-10the format changes randomly. This also shows us that they just have all the HTML pages as static files on their server. E.g. with:we see:grep -a '<pre' * | s2013-05-09:<pre style='font-family:Verdana, Arial, Helvetica, sans-serif; '><strong>2013<C4><EA>05<D4><C2>09<C8>յ<BD><C6>ڹ<FA><BC><CA><D3><F2><C3><FB></strong><br>0-3y.com 2013-05-10:<pre><strong>2013<C4><EA>05<D4><C2>10<C8>յ<BD><C6>ڹ<FA><BC><CA><D3><F2><C3><FB></strong> - justdropped.com: e.g. www.justdropped.com/drops/010112com.html. First known working day:

2006-01-01. Unthrottled. - yoid.com: e.g.: yoid.com/bydate.php?d=2016-06-03&a=a. First known workding day:

2016-06-01.
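The noise described above (leading empty lines, runs of trailing zero bytes, CRLF line endings, names with non-ASCII bytes) could be filtered out before merging. The following is only a minimal sketch of one way to do it: `clean_domains` is a hypothetical helper and not part of hupo.sh, and it assumes one domain per line on stdin.

```shell
# Hypothetical helper, not part of hupo.sh: clean one scraped day file read from stdin.
clean_domains() {
  # LC_ALL=C and grep -a make every tool work on raw bytes,
  # so stray GBK bytes cannot trip multibyte handling.
  LC_ALL=C tr -d '\000\r' |
    LC_ALL=C tr 'A-Z' 'a-z' |
    LC_ALL=C grep -aE '^[a-z0-9]([a-z0-9-]*[a-z0-9])?(\.[a-z0-9]([a-z0-9-]*[a-z0-9])?)+$' |
    LC_ALL=C sort -u
}
```

Usage would be something like `clean_domains < tmp/hupo/2013-01-02`. Anything that is not a plain ASCII hostname with at least one dot gets dropped, which also removes empty lines and truncated entries such as the `p1co` fragment left in front of the zero bytes.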

Data comparison:

- 2012-01-01. Looking only at the .com:
  - webmastercn has just about ten more than justdropped, the rest is exactly the same
  - justdropped has some extra and some missing from hupo

  The lists are quite similar however.
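A comparison like the one above can be reproduced with `comm` on sorted copies of two per-day lists. This is just a sketch: `compare_lists` is a hypothetical helper, and it assumes each input file holds one domain per line.

```shell
# compare_lists A B: count domains only in A, only in B, and in both.
compare_lists() {
  a=$(mktemp) && b=$(mktemp) || return 1
  # comm needs both inputs sorted under the same collation, hence LC_ALL=C throughout.
  LC_ALL=C sort -u "$1" > "$a"
  LC_ALL=C sort -u "$2" > "$b"
  echo "only_first $(LC_ALL=C comm -23 "$a" "$b" | wc -l | tr -d ' ')"
  echo "only_second $(LC_ALL=C comm -13 "$a" "$b" | wc -l | tr -d ' ')"
  echo "both $(LC_ALL=C comm -12 "$a" "$b" | wc -l | tr -d ' ')"
  rm -f "$a" "$b"
}
```

The two arguments would be the same day's file from two different trackers. Dropping the `wc -l` part of a line prints the differing domains themselves instead of just counting them.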

We've made the following pipelines for hupo.com + webmasterhome.cn merging:

./hupo.sh &

./webmastercn.sh &

./justdropped.sh &

wait

./justdropped-post.sh

./hupo-merge.sh

# Export as small Google indexable files in a Git repository.

./hupo-repo.sh

# Export as per year zips for Internet Archive.

./hupo-zip.sh

# Obtain count statistics:

./hupo-wc.shCount unique domains in the repos:

( echo */*/*/* | xargs cat ) | sort -u | wcThe extracted data is present at:Soon after uploading, these repos started getting some interesting traffic, presumably started by security trackers going "bling bling" on certain malicious domain names in their databases:

- archive.org/details/expired-domain-names-by-day

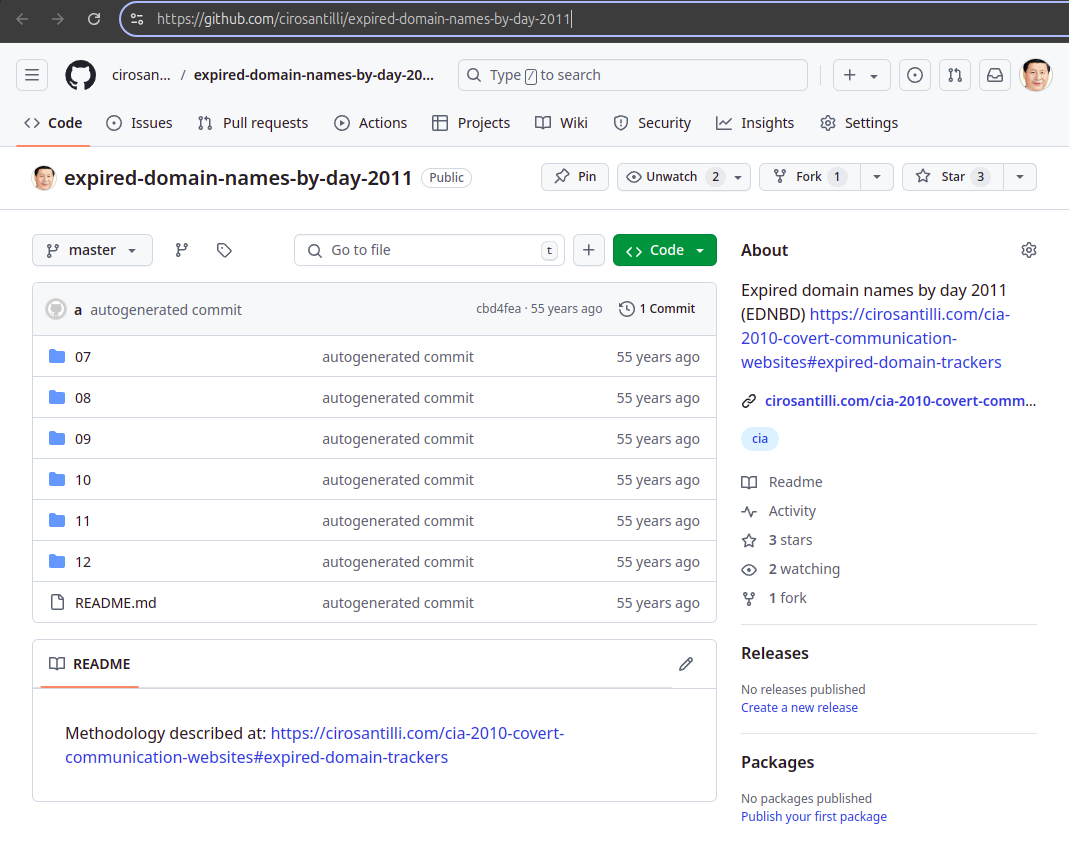

- github.com/cirosantilli/expired-domain-names-by-day-* repos:

- github.com/cirosantilli/expired-domain-names-by-day-2006

- github.com/cirosantilli/expired-domain-names-by-day-2007

- github.com/cirosantilli/expired-domain-names-by-day-2008

- github.com/cirosantilli/expired-domain-names-by-day-2009

- github.com/cirosantilli/expired-domain-names-by-day-2010

- github.com/cirosantilli/expired-domain-names-by-day-2011 (~11M)

- github.com/cirosantilli/expired-domain-names-by-day-2012 (~18M)

- github.com/cirosantilli/expired-domain-names-by-day-2013 (~28M)

- github.com/cirosantilli/expired-domain-names-by-day-2014 (~29M)

- github.com/cirosantilli/expired-domain-names-by-day-2015 (~28M)

- github.com/cirosantilli/expired-domain-names-by-day-2016

- github.com/cirosantilli/expired-domain-names-by-day-2017

- github.com/cirosantilli/expired-domain-names-by-day-2018

- github.com/cirosantilli/expired-domain-names-by-day-2019

- github.com/cirosantilli/expired-domain-names-by-day-2020

- github.com/cirosantilli/expired-domain-names-by-day-2021

- github.com/cirosantilli/expired-domain-names-by-day-2022

- github.com/cirosantilli/expired-domain-names-by-day-2023

- github.com/cirosantilli/expired-domain-names-by-day-2024

- GitHub trackers:

- admin-monitor.shiyue.com

- anquan.didichuxing.com

- app.cloudsek.com

- app.flare.io

- app.rainforest.tech

- app.shadowmap.com

- bo.serenety.xmco.fr 8 1

- bts.linecorp.com

- burn2give.vercel.app

- cbs.ctm360.com 17 2

- code6.d1m.cn

- code6-ops.juzifenqi.com

- codefend.devops.cndatacom.com

- dlp-code.airudder.com

- easm.atrust.sangfor.com

- ec2-34-248-93-242.eu-west-1.compute.amazonaws.com

- ecall.beygoo.me 2 1

- eos.vip.vip.com 1 1

- foradar.baimaohui.net 2 1

- fty.beygoo.me

- hive.telefonica.com.br 2 1

- hulrud.tistory.com

- kartos.enthec.com

- soc.futuoa.com

- lullar-com-3.appspot.com

- penetration.houtai.io 2 1

- platform.sec.corp.qihoo.net

- plus.k8s.onemt.co 4 1

- pmp.beygoo.me 2 1

- portal.protectorg.com

- qa-boss.amh-group.com

- saicmotor.saas.cubesec.cn

- scan.huoban.com

- sec.welab-inc.com

- security.ctrip.com 10 3

- siem-gs.int.black-unique.com 2 1

- soc-github.daojia-inc.com

- spigotmc.org 2 1

- tcallzgroup.blueliv.com

- tcthreatcompass05.blueliv.com 4 1

- tix.testsite.woa.com 2 1

- toucan.belcy.com 1 1

- turbo.gwmdevops.com 18 2

- urlscan.watcherlab.com

- zelenka.guru. Looks like a Russian hacker forum.

- LinkedIn profile views:

- "Information Security Specialist at Forcepoint"

Check for overlap of the merge:

grep -Fx -f <( jq -r '.[].host' ../media/cia-2010-covert-communication-websites/hits.json ) cia-2010-covert-communication-websites/tmp/merge/*

Next, we can start searching by keyword with Wayback Machine CDX scanning with Tor parallelization with our helper ../cia-2010-covert-communication-websites/hupo-cdx-tor.sh, e.g. to check domains that contain the term "news":

./hupo-cdx-tor.sh mydir 'news|global' 2011 2019

produces per-year results for the regex term news|global between the years under:

tmp/hupo-cdx-tor/mydir/2011

tmp/hupo-cdx-tor/mydir/2012

OK let's:

./hupo-cdx-tor.sh out 'news|headline|internationali|mondo|mundo|mondi|iran|today'

Other searches that are not dense enough for our patience:

world|global|[^.]info

OMG news search might be producing some golden, golden new hits!!! Going full into this. Hits:

- thepyramidnews.com

- echessnews.com

- tickettonews.com

- airuafricanews.com

- vuvuzelanews.com

- dayenews.com

- newsupdatesite.com

- arabicnewsonline.com

- arabicnewsunfiltered.com

- newsandsportscentral.com

- networkofnews.com

- trekkingtoday.com

- financial-crisis-news.com

and a few more. It's amazing.
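The internals of hupo-cdx-tor.sh are not shown here, so the following is only a rough sketch of the keyword step under our own assumptions: filter domain lists by a regex, then build one Wayback Machine CDX query per surviving domain. `keyword_cdx_urls` is a hypothetical name, the query parameters chosen (`matchType`, `fl`, `collapse`, `limit`) are just one plausible combination, and nothing is actually fetched, so no Tor is involved.

```shell
# keyword_cdx_urls REGEX [FILE...]: for each domain whose name matches REGEX,
# print a Wayback Machine CDX query URL listing archived URLs under that domain.
# Reads stdin when no FILE is given. Only prints the URLs, does not fetch them.
keyword_cdx_urls() {
  regex=$1
  shift
  cat "$@" | LC_ALL=C grep -aE "$regex" | LC_ALL=C sort -u |
    while IFS= read -r domain; do
      printf 'https://web.archive.org/cdx/search/cdx?url=%s&matchType=domain&fl=original&collapse=urlkey&limit=100\n' "$domain"
    done
}
```

Each printed URL could then be handed to whatever downloader is used, and the returned archived paths checked for signs of a communication mechanism.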

CIA 2010 covert communication websites Overview of Ciro Santilli's investigation Created 2025-05-07 Updated 2025-07-19

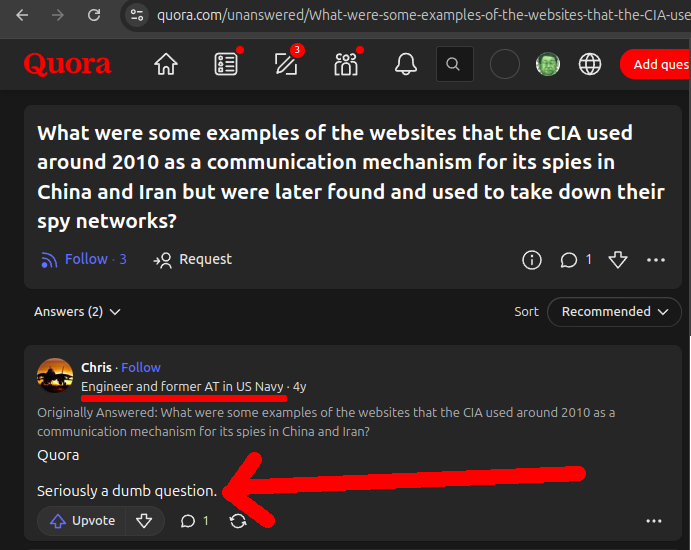

Ciro Santilli had heard about the 2018 Yahoo article around 2020 while studying for his China campaign, because the websites had been used to take down the CIA's network in China. He even asked on Quora about it, but there were no publicly known domains at the time to serve as a starting point. Chris, Electrical Engineer and former Avionics Tech in the US Navy, even replied suggesting that obviously the CIA is so competent that it would never ever have its sites leaked like that:

Seriously a dumb question.

In 2023, one year after the Reuters article had been published, Ciro Santilli was killing some time on YouTube when he saw a curious video: Video 1. "Compromised Comms by Darknet Diaries (2023)". As soon as he understood what it was about and that it was likely related to the previously undisclosed websites that he was interested in, he went on to read the Reuters article that the podcast pointed him to.

Being a half-arsed web developer himself, Ciro knows that the attack surface of a website is about the size of Texas, and the potential for fingerprinting is off the charts with so many bits and pieces sticking out. And given that there were at least 885 of them, surely we should be able to find a few more than nine, right?

In particular, it is fun how these websites provide to anyone "live" examples of the USA spying on its own allies in the form of Wayback Machine archives.

Given all of this, Ciro knew he had to try and find some of the domains himself using the newly available information! It was an irresistible real-life capture the flag.

Chris, get fucked.

It was the YouTube suggestion for this video that made Ciro Santilli aware of the Reuters article almost one year after its publication, which kickstarted his research on the topic.

Full podcast transcript: darknetdiaries.com/transcript/75/

Ciro Santilli pinged the Podcast's host Jack Rhysider on Twitter and he ACK'ed which is cool, though he was skeptical about the strength of the fingerprints found, and didn't reply when clarification was offered. Perhaps the material is just not impactful enough for him to produce any new content based on it. Or also perhaps it comes too close to sources and methods for his own good as a presumably American citizen.

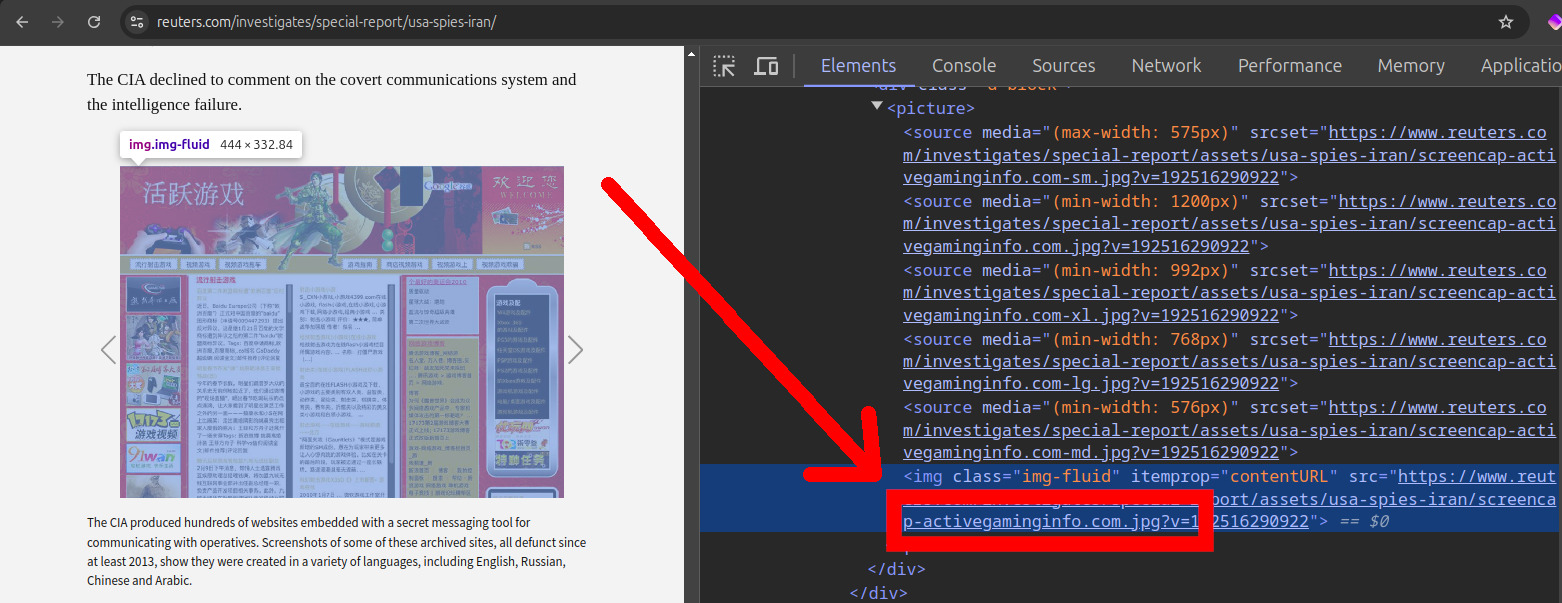

The first step was to try and obtain the domain names of all nine websites that Reuters had highlighted as they had only given two domains explicitly.

Thankfully however, either by carelessness or intentionally, this was easy to do by inspecting the address of the screenshots provided. For example, one of the URLs was:

https://www.reuters.com/investigates/special-report/assets/usa-spies-iran/screencap-activegaminginfo.com.jpg?v=192516290922

which corresponds to activegaminginfo.com.

Inspecting the Reuters article HTML source code. Source. The Reuters article only gave one URL explicitly: iraniangoals.com. But most others could be found by inspecting the HTML of the screenshots provided, except for the Carson website.

Once we had this, we were then able to inspect the websites on the Wayback Machine to better understand possible fingerprints such as their communication mechanism.
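The screenshot URL trick is mechanical enough to script. A minimal sketch, assuming the image addresses all follow the `screencap-<domain>.jpg?v=...` pattern of the one example above (`screencap_domain` is a hypothetical helper name):

```shell
# screencap_domain URL: recover the domain embedded in a screenshot file name.
screencap_domain() {
  # Strip everything up to "screencap-", then the image extension and any query string.
  printf '%s\n' "$1" | sed -E 's#^.*/screencap-##; s#\.(jpg|jpeg|png)(\?.*)?$##'
}
```

Running it on the URL above prints `activegaminginfo.com`.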

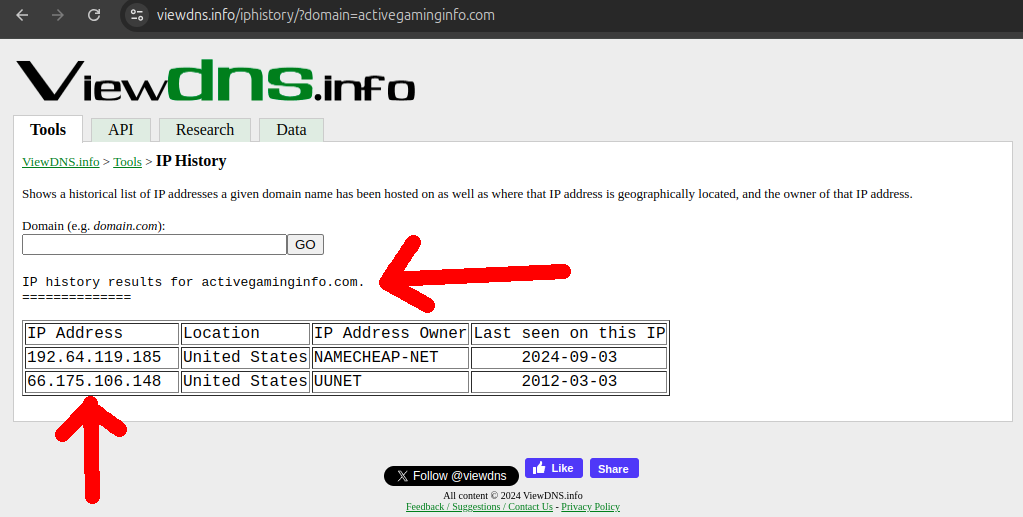

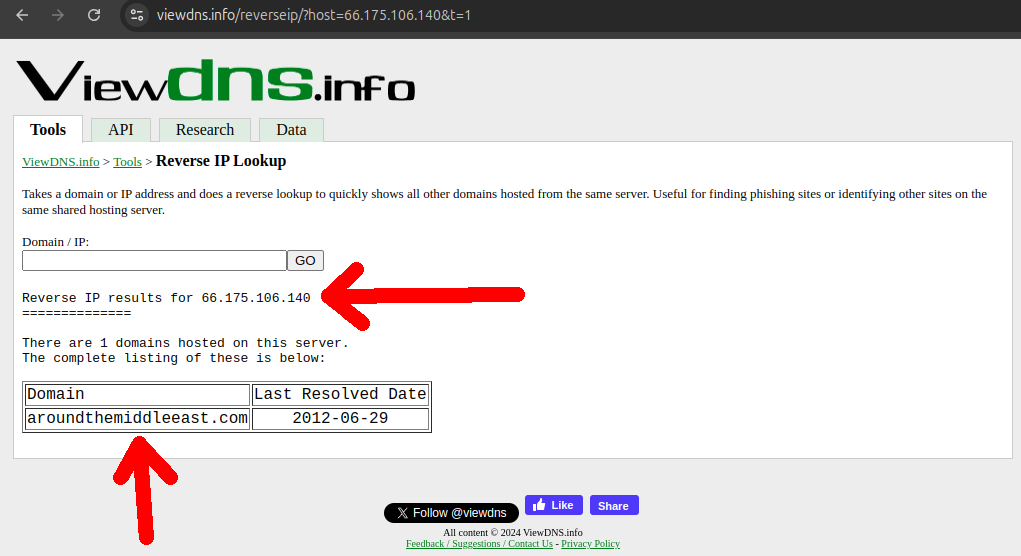

The next step was to use our knowledge of the sequential IP flaw to look for more neighbor websites to the nine we knew of.

This was not so easy to do because the websites are down and so it requires historical data. Luckily for us, we found viewdns.info, which allowed for 200 free historical queries (they seem to have since removed this hard limit and moved to only throttling), leading to the discovery of some of our own new domains!
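With a limited number of free queries, it helps to enumerate the candidate neighbor IPs up front. A small sketch of that enumeration follows. `ip_neighbors` is a hypothetical helper, the default radius of 2 is arbitrary, and as a simplification it stays within the last octet rather than crossing into adjacent /24 ranges.

```shell
# ip_neighbors IP [RADIUS]: print the IPv4 addresses within RADIUS of IP,
# excluding IP itself. Simplification: only the last octet is varied.
ip_neighbors() {
  ip=$1
  radius=${2:-2}
  prefix=${ip%.*}   # first three octets
  last=${ip##*.}    # last octet
  i=$((last - radius))
  while [ "$i" -le $((last + radius)) ]; do
    if [ "$i" -ge 0 ] && [ "$i" -le 255 ] && [ "$i" -ne "$last" ]; then
      echo "$prefix.$i"
    fi
    i=$((i + 1))
  done
}
```

For example, `ip_neighbors 192.0.2.10 2` (a documentation address, not a real hit) lists 192.0.2.8, 192.0.2.9, 192.0.2.11 and 192.0.2.12, each of which would then be looked up in a historical IP to domain service.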

This gave us a larger website sample size in the order of the tens, which allowed us to better grasp more of the possible different styles of website and have a much better idea of what a good fingerprint would look like.

The next major and difficult step would be to find new IP ranges.

This was and still is a hacky heuristic process for us, but we've had the most success with the following methods:

- step 1) get huge lists of historic domain names. The two most valuable sources so far have been:
  - 2013 DNS Census
  - expired domain trackers

- step 2) filter the domain lists down somehow to a more manageable number of domains. The most successful heuristics have been:
  - for 2013 DNS Census which has IPs, check that they are the only domain in a given IP, which was the case for the majority of CIA websites, but was already not so common for legitimate websites
  - they have the word news in the domain name, given that so many of the websites were fake news aggregators

- step 3) search on the Wayback Machine whether any of those filtered domains contain URLs that could be those of a communication mechanism. In particular, we've used a small army of Tor bots to overcome the Wayback Machine's IP throttling and greatly increase our checking capacity
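The "only domain in a given IP" heuristic from step 2 can be sketched with awk, assuming rows in the `domain,timestamp,ip` layout of the 2013 DNS Census CSVs. `sole_domain_ips` is a hypothetical name, and the real data set is large enough that a database would likely be a better fit than this in-memory version.

```shell
# sole_domain_ips [FILE...]: print "ip,domain" for every IP that hosts exactly
# one distinct domain. Input rows are assumed to be: domain,timestamp,ip
sole_domain_ips() {
  awk -F, '
    # Count each (ip, domain) pair only once, however many timestamps it has.
    NF == 3 && !seen[$3 SUBSEP $1]++ { count[$3]++; name[$3] = $1 }
    END { for (ip in count) if (count[ip] == 1) print ip "," name[ip] }
  ' "$@" | LC_ALL=C sort
}
```

The surviving domains could then go through the news keyword filter and the Wayback Machine checks of step 3.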

DNS Census 2013 website. Source. This source provided valuable historical domain to IP data. It was likely extracted with an illegal botnet. Data excerpt from the CSVs:

amazon.com,2012-02-01T21:33:36,72.21.194.1
amazon.com,2012-02-01T21:33:36,72.21.211.176
amazon.com,2013-10-02T19:03:39,72.21.194.212
amazon.com,2013-10-02T19:03:39,72.21.215.232
amazon.com.au,2012-02-10T08:03:38,207.171.166.22
amazon.com.au,2012-02-10T08:03:38,72.21.206.80
google.com,2012-01-28T05:33:40,74.125.159.103
google.com,2012-01-28T05:33:40,74.125.159.104
google.com,2013-10-02T19:02:35,74.125.239.41
google.com,2013-10-02T19:02:35,74.125.239.46

The four communication mechanisms used by the CIA websites. Java Applets, Adobe Flash, JavaScript and HTTPS

Expired domain names by day 2011. Source. The scraping of expired domain trackers to GitHub was one of the positive outcomes of this project.