This was the Holy Grail as of 2023, when text-to-image started to really take off, but text-to-video was miles behind.

- medium.com/@chain.info1/the-mystery-behind-satoshi-tribute-donations-cf4ce28c56a1 The Mystery Behind "Satoshi Tribute" Donations by Chain.Info (2020)

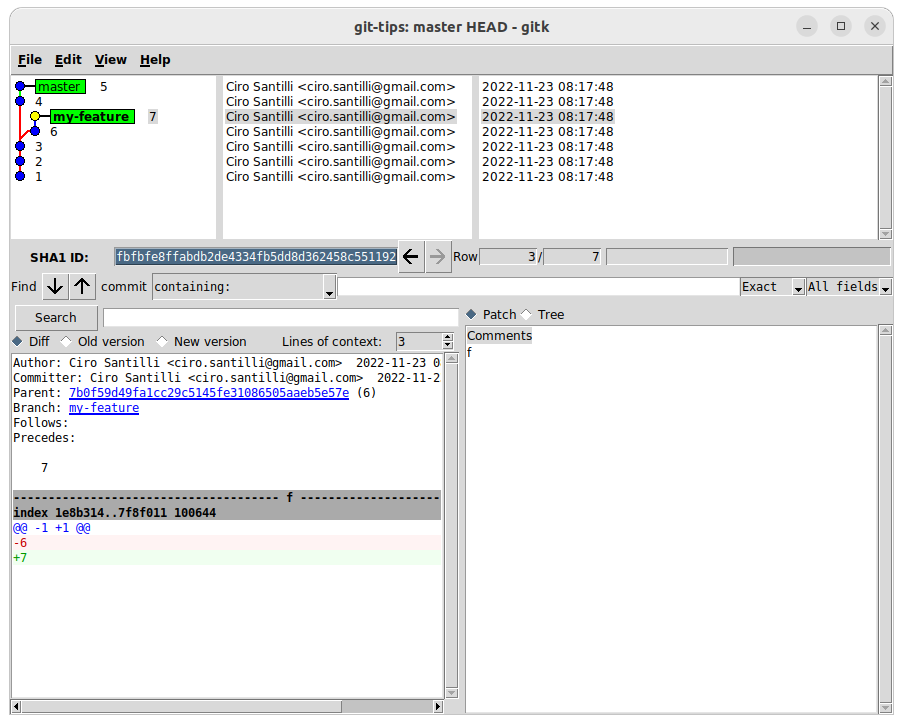

This is the most important thing to understand about Git!

You must:

- be able to visualize the commit tree

- understand how each git command modifies the commit DAG
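One way to practice both points is to build a tiny throwaway repo and ask Git to draw it. The following is only a sketch: the temporary directory, the placeholder identity `a@example.com` and the branch name `my-feature` are assumptions chosen for illustration.

```shell
#!/usr/bin/env bash
set -eu

# Throwaway repo in a temp dir; the identity below is a placeholder.
repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master   # same branch name whatever the git defaults are
export GIT_AUTHOR_NAME=a GIT_AUTHOR_EMAIL=a@example.com
export GIT_COMMITTER_NAME=a GIT_COMMITTER_EMAIL=a@example.com

for i in 1 2 3; do
  echo "$i" > f
  git add f
  git commit -q -m "$i"
done

# Branch off from commit 2 and add one commit there.
git checkout -q -b my-feature HEAD~1
echo 4 > f
git add f
git commit -q -m 4

# Draw the DAG for all branches, newest commits at the top.
git log --graph --oneline --all

# Raw edges: each line is "<commit> <parent>...".
git rev-list --parents --all

commits="$(git rev-list --count --all)"
echo "commits in the DAG: $commits"
```

Running `git log --graph --oneline --all` before and after each command you are unsure about is a cheap way to see how that command changed the DAG.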

But not every directed acyclic graph is a tree.

Example of a tree (and therefore also a DAG):

    5
    |
    4 7
    | |
    3 6
    |/
    2
    |
    1

Convention in this presentation: arrows implicitly point up, just like in a `git log`, i.e. the line between 1 and 2 is an arrow from 1 to 2, and so on.

Some people like merges, but they are ugly and stupid. Rebase instead and keep linear history.
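As a rough illustration of "rebase instead", the sketch below lets two branches diverge, replays the feature branch on top of `master`, and then fast-forwards `master`, so that no merge commit is ever created. The helper name `commit`, the `file-N` file names and the placeholder identity are assumptions made for this example; each commit touches its own file so that the rebase cannot conflict.

```shell
#!/usr/bin/env bash
set -eu

repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master
export GIT_AUTHOR_NAME=a GIT_AUTHOR_EMAIL=a@example.com
export GIT_COMMITTER_NAME=a GIT_COMMITTER_EMAIL=a@example.com

# Hypothetical helper: one commit that only touches its own file.
commit() {
  echo "$1" > "file-$1"
  git add "file-$1"
  git commit -q -m "$1"
}

commit 1
commit 2
git checkout -q -b my-feature
commit 3            # feature work
git checkout -q master
commit 4            # master moved on meanwhile: history has diverged

# Replay my-feature on top of master instead of merging.
git checkout -q my-feature
git rebase -q master

# master can now be fast-forwarded, so no merge commit is needed.
git checkout -q master
git merge -q --ff-only my-feature

git log --graph --oneline

# Number of merge commits reachable from master.
merges="$(git rev-list --merges --count master)"
echo "merge commits: $merges"
```

The `--ff-only` flag makes `git merge` refuse to do anything other than a fast-forward, which is a simple guard against accidentally creating a merge commit.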

Linear history:

    5 master
    |
    4
    |
    3
    |
    2
    |
    1 first commit

Branched history:

    7 master
    |\
    | \
    6 \
    |\ \
    | | |
    3 4 5
    | | |
    | / /
    |/ /
    2 /
    | /
    1/ first commit

Which type of tree do you think will be easier to understand and maintain?


You may disconnect now if you still like branched history.

Generate a minimal test repo. You should get in the habit of doing this to test stuff out.

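    #!/usr/bin/env bash
    # Create a throwaway repo with five commits on the initial branch.
    mkdir git-tips
    cd git-tips
    git init
    for i in 1 2 3 4 5; do
      echo "$i" > f
      git add f
      git commit -m "$i"
    done
    # Go back two commits and start a branch there.
    git checkout HEAD~2
    git checkout -b my-feature
    # Add two commits on the new branch.
    for i in 6 7; do
      echo "$i" > f
      git add f
      git commit -m "$i"
    done

Oh but there are usually 2 trees: local and remote.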

So you also have to learn how to observe and modify and sync with the remote tree!

But basically:

    git fetch

to update the remote tree. Then you can use the remote branches exactly like any other branch, except that you prefix them with the remote name (usually `origin/*`), e.g.:

- `origin/master` is the latest fetch of the remote version of `master`

- `origin/my-feature` is the latest fetch of the remote version of `my-feature`
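To see that `origin/master` is only a snapshot taken at the last fetch, here is a small self-contained sketch. The directory names `server` and `clone` and the placeholder identity are assumptions for the example: `server` is a local repo standing in for a real remote.

```shell
#!/usr/bin/env bash
set -eu

work="$(mktemp -d)"
cd "$work"
export GIT_AUTHOR_NAME=a GIT_AUTHOR_EMAIL=a@example.com
export GIT_COMMITTER_NAME=a GIT_COMMITTER_EMAIL=a@example.com

# "server" plays the role of the remote; "clone" is our local copy.
git init -q server
git -C server symbolic-ref HEAD refs/heads/master
echo 1 > server/f
git -C server add f
git -C server commit -q -m 1

git clone -q server clone

# Someone else adds a commit on the remote side.
echo 2 > server/f
git -C server commit -q -a -m 2

cd clone
# Before fetching, origin/master is still the old snapshot.
before="$(git rev-list --count origin/master)"
git fetch -q
after="$(git rev-list --count origin/master)"
local_count="$(git rev-list --count master)"
echo "origin/master: $before -> $after commits, local master: $local_count"

# Commits that the remote has and the local branch lacks.
git log --oneline master..origin/master
```

Note that `git fetch` only moves `origin/master`; the local `master` stays where it was until you explicitly update it, for example with a rebase onto `origin/master`.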

Non-POSIX only here.

The best open source implementation as of 2020 seems to be: Mozilla rr.
