activatedgeek/LeNet-5 Updated 2025-07-16

It trains the LeNet-5 neural network on the MNIST dataset from scratch, and afterwards you can give it newly hand-written digits 0 to 9 and it will hopefully recognize the digit for you.

Ciro Santilli created a small fork of this repo at lenet adding better automation for:

- extracting MNIST images as PNG

- ONNX CLI inference taking any image files as input

- a Python tkinter GUI that lets you draw and see inference live

- running on GPU

Install on Ubuntu 24.10 with:

sudo apt install protobuf-compiler

git clone https://github.com/activatedgeek/LeNet-5

cd LeNet-5

git checkout 95b55a838f9d90536fd3b303cede12cf8b5da47f

virtualenv -p python3 .venv

. .venv/bin/activate

pip install Pillow==6.2.0 numpy==1.24.2 onnx==1.13.1 torch==2.0.0 torchvision==0.15.1 visdom==0.2.4

We use our own pip install because their requirements.txt uses >= instead of ==, making it random if things will work or not.

On Ubuntu 22.10 it was instead:

pip install Pillow==6.2.0 numpy==1.26.4 onnx==1.17.0 torch==2.6.0 torchvision==0.21.0 visdom==0.2.4

Then run with:

python run.py

This script throws a billion exceptions because we didn't start the Visdom server, but everything works nevertheless, we just don't get a visualization of the training.

The terminal outputs lines such as:

Train - Epoch 1, Batch: 0, Loss: 2.311587

Train - Epoch 1, Batch: 10, Loss: 2.067062

Train - Epoch 1, Batch: 20, Loss: 0.959845

...

Train - Epoch 1, Batch: 230, Loss: 0.071796

Test Avg. Loss: 0.000112, Accuracy: 0.967500

...

Train - Epoch 15, Batch: 230, Loss: 0.010040

Test Avg. Loss: 0.000038, Accuracy: 0.989300

One of the benefits of the ONNX output is that we can nicely visualize the neural network on Netron:

Figure: Netron visualization of the activatedgeek/LeNet-5 ONNX output.

From this we can see the bifurcation on the computational graph as done in the code:

output = self.c1(img)

x = self.c2_1(output)

output = self.c2_2(output)

output += x

output = self.c3(output)

Ciro Santilli's hardware Lenovo ThinkPad P51 (2017) log Updated 2025-07-16

- battery life:

- 2023-04: on-browser streaming + light browsing on Ubuntu 22.10: about 2h45. Too low! Gotta try buying a new battery.

- 2022-01-04 updated firmware after noticing that Ubuntu 21.10 does not wake up from suspend, which seemed to happen every time when not connected to external power.

  dmidecode diff excerpt:

      BIOS Information
      Vendor: LENOVO
      - Version: N1UET40W (1.14 )
      - Release Date: 09/28/2017
      + Version: N1UET71W (1.45 )
      + Release Date: 07/18/2018

  Used the "Ubuntu Software" GUI as mentioned at: support.lenovo.com/gb/en/solutions/ht510810-how-to-do-software-updates-linux. Kudos for making this accessible to newbs.

  After doing that, another update became available to: 0.1.56, clicked it and it was much faster than the previous one, and didn't auto reboot. After manual reboot, dmidecode diffed again:

      BIOS Information
      Vendor: LENOVO
      - Version: N1UET71W (1.45 )
      - Release Date: 07/18/2018
      + Version: N1UET82W (1.56 )
      + Release Date: 08/12/2021

  plus a bunch of other lines.

- 2021-06-05 upgraded to Ubuntu 21.04 with a clean install from an ISO. Selected:

  - "Minimal installation"

  - "Erase disk and install Ubuntu". Notably, this erased the Microsoft Windows that came with the computer and was never used, not even once

  - "Erase disk and use ZFS"

  - Encrypt the new Ubuntu installation for security

  After this, the GUI felt fast, who would have thought that erasing a bunch of stuff would make the system faster!

  lsblk contains:

      zd0 230:0 0 500M 0 disk
      └─keystore-rpool 253:0 0 484M 0 crypt /run/keystore/rpool
      nvme0n1 259:0 0 476.9G 0 disk
      ├─nvme0n1p1 259:1 0 512M 0 part /boot/efi
      ├─nvme0n1p2 259:2 0 2G 0 part
      │ └─cryptoswap 253:1 0 2G 0 crypt
      ├─nvme0n1p3 259:3 0 2G 0 part
      └─nvme0n1p4 259:4 0 472.4G 0 part

  and lsblk -f:

      zd0 crypto_LUKS 2
      └─keystore-rpool ext4 1.0 keystore-rpool
      nvme0n1
      ├─nvme0n1p1 vfat FAT32
      ├─nvme0n1p2 crypto_LUKS 2
      │ └─cryptoswap
      ├─nvme0n1p3 zfs_member 5000 bpool
      └─nvme0n1p4 zfs_member 5000 rpoo

  Then:

      grep '[rb]pool' /proc/mounts

  contains:

      rpool/ROOT/ubuntu_uvs1fq / zfs rw,relatime,xattr,posixacl 0 0
      rpool/USERDATA/ciro_czngbg /home/ciro zfs rw,relatime,xattr,posixacl 0 0
      rpool/USERDATA/root_czngbg /root zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/srv /srv zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/usr/local /usr/local zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/games /var/games zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/log /var/log zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/lib /var/lib zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/mail /var/mail zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/snap /var/snap zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/www /var/www zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/spool /var/spool zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/lib/AccountsService /var/lib/AccountsService zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/lib/NetworkManager /var/lib/NetworkManager zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/lib/apt /var/lib/apt zfs rw,relatime,xattr,posixacl 0 0
      rpool/ROOT/ubuntu_uvs1fq/var/lib/dpkg /var/lib/dpkg zfs rw,relatime,xattr,posixacl 0 0
      bpool/BOOT/ubuntu_uvs1fq /boot zfs rw,nodev,relatime,xattr,posixacl 0 0

  which gives an idea of how the above map to mountpoints.

- 2020-06-06: dropped some lemon juice on the bottom left of touchpad. Bottom left button not working anymore... I'm an idiot. There are many other alternatives, but very aggravating, I'll replace it for sure. Can't find the exact replacement part or any videos showing its replacement online easily, dang. For the T430: www.youtube.com/watch?v=F3lzV9uXRjU Asked at: forums.lenovo.com/t5/ThinkPad-P-and-W-Series-Mobile-Workstations/P51-left-bottom-button-below-trackpad-mouse-left-click-stopped-working-possible-to-replace/m-p/5019903 Also I could not access it because you need to remove the HDD first: www.youtube.com/watch?v=5Klawxc7T_Y and I can't pull it out even with considerable force, unlike in the video... And OMG, those button caps are impossible to re-install once removed!!! Then when I put the whole thing back together, the upper buttons were not working anymore. FUUUUUUUUCK. When first opening I pulled on it without properly removing the cap and it came off, but it didn't look broken in any way and I put it back in. Keyboard works thank God, so the right black connector is fine, the left white one appears to be the one for the upper keys and trackpoint, both of which stopped working. The hardware manual confirms that they are both part of the same device, so basically a mouse :-) TODO can it be bought separately from the keyboard? Doesn't look like it, photo of keyboard part includes those buttons. The manual also confirms that the bottom buttons are one device with the trackpad "trackpad with buttons", thus forming the second entire mouse.

- 2019-04-17: popup asking about "ThinkPad P51 Management Engine Update" from 182.29.3287 to 184.60.3561, said yes.

- partition setup: askubuntu.com/questions/343268/how-to-use-manual-partitioning-during-installation/976430#976430

- BIOS:

- for NVIDIA driver:

- for KVM, required by Android Emulator: enable virtualization extensions

- TODO fix the brightness keys:

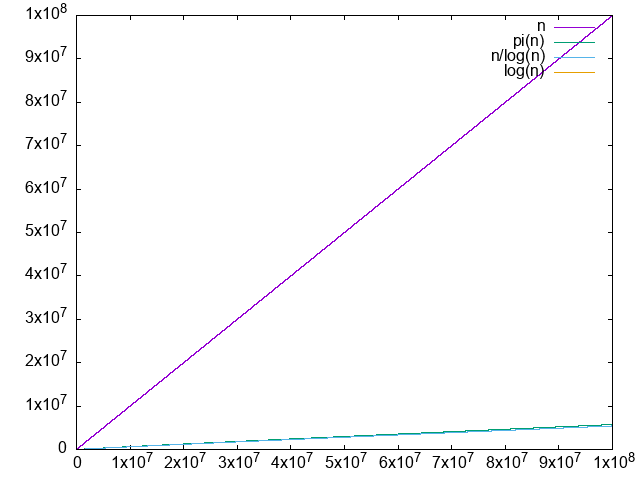

prime-number-theorem Updated 2025-07-16

Consider this a study in failed computational number theory.

The approximation converges really slowly, and we can't easily go far enough to see that the ratio converges to 1 with only awk and primes:

sudo apt install bsdgames

cd prime-number-theorem

./main.py 100000000

Runs in 30 minutes tested on Ubuntu 22.10 and P51, producing:

It is clear that the difference diverges, albeit very slowly.

We just don't have enough points to clearly see that the ratio is converging to 1.0, the convergence truly is very slow. The logarithm integral approximation is much much better, but we can't calculate it in awk, sadface.
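To make the slow convergence concrete, here is a minimal Python sketch, independent of the repository's main.py. It sieves the primes below a limit and tabulates the prime count, the x/ln(x) approximation, their ratio and their difference at powers of ten. The names prime_counts and pnt_table are made up for this illustration.

```python
import math


def prime_counts(limit):
    """Return a list pi where pi[n] is the number of primes <= n (sieve of Eratosthenes)."""
    is_prime = bytearray([1]) * (limit + 1)
    is_prime[0:2] = b"\x00\x00"
    for p in range(2, math.isqrt(limit) + 1):
        if is_prime[p]:
            # Cross out every multiple of p starting at p*p.
            is_prime[p * p :: p] = bytearray(len(range(p * p, limit + 1, p)))
    pi = [0] * (limit + 1)
    count = 0
    for n in range(limit + 1):
        count += is_prime[n]
        pi[n] = count
    return pi


def pnt_table(limit):
    """Rows of (x, pi(x), x/ln(x), ratio, difference) for x = 10, 100, ... <= limit."""
    pi = prime_counts(limit)
    rows = []
    x = 10
    while x <= limit:
        approx = x / math.log(x)
        rows.append((x, pi[x], approx, pi[x] / approx, pi[x] - approx))
        x *= 10
    return rows


if __name__ == "__main__":
    for x, count, approx, ratio, diff in pnt_table(10**6):
        print(f"{x:>8} {count:>6} {approx:>10.1f} {ratio:.4f} {diff:>8.1f}")
```

At one million the ratio is still about 1.08 while the difference keeps growing, which matches the two observations above.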

But looking at: en.wikipedia.org/wiki/File:Prime_number_theorem_ratio_convergence.svg we see that it takes way longer to get closer to 1, and even at the far end of that plot it is still not super close. Inspecting the code there we see:

(* Supplement with larger known PrimePi values that are too large for \

Mathematica to compute *)

LargePiPrime = {{10^13, 346065536839}, {10^14, 3204941750802},

{10^15, 29844570422669}, {10^16, 279238341033925},

{10^17, 2623557157654233}, {10^18, 24739954287740860},

{10^19, 234057667276344607}, {10^20, 2220819602560918840},

{10^21, 21127269486018731928}, {10^22, 201467286689315906290},

{10^23, 1925320391606803968923}, {10^24, 18435599767349200867866}};

so OK, it is not something doable on a personal computer just like that.

Go UI Updated 2025-07-16

Hide top bar on Ubuntu Updated 2025-07-16

Or likely more generally, on GNOME desktop, which is the default desktop environment as of Ubuntu 22.10.

LLVM IR hello world Updated 2025-07-16

Example: llvm/hello.ll adapted from: llvm.org/docs/LangRef.html#module-structure but without double newline.

To execute it as mentioned at github.com/dfellis/llvm-hello-world we can either use their crazy assembly interpreter, tested on Ubuntu 22.10:

sudo apt install llvm-runtime

lli hello.ll

This seems to use puts from the C standard library.

Or we can lower it to assembly of the local machine:

sudo apt install llvm

llc hello.ll

which produces hello.s, and then we can assemble, link and run with gcc:

gcc -o hello.out hello.s -no-pie

./hello.out

or with clang:

clang -o hello.out hello.s -no-pie

./hello.out

hello.s uses the GNU GAS format, which clang is highly compatible with, so both should work in general.

Matter.js Updated 2025-07-16

To run the demos locally, tested on Ubuntu 22.10:

git clone https://github.com/liabru/matter-js

cd matter-js

git checkout 0.19.0

npm install

npm run dev

and this opens up the demos on the browser.

MLperf v2.1 ResNet Updated 2025-07-16

Instructions at:

Ubuntu 22.10 setup with tiny dummy manually generated ImageNet and run on ONNX:

sudo apt install pybind11-dev

git clone https://github.com/mlcommons/inference

cd inference

git checkout v2.1

virtualenv -p python3 .venv

. .venv/bin/activate

pip install numpy==1.24.2 pycocotools==2.0.6 onnxruntime==1.14.1 opencv-python==4.7.0.72 torch==1.13.1

cd loadgen

CFLAGS="-std=c++14" python setup.py develop

cd -

cd vision/classification_and_detection

python setup.py develop

wget -q https://zenodo.org/record/3157894/files/mobilenet_v1_1.0_224.onnx

export MODEL_DIR="$(pwd)"

export EXTRA_OPS='--time 10 --max-latency 0.2'

tools/make_fake_imagenet.sh

DATA_DIR="$(pwd)/fake_imagenet" ./run_local.sh onnxruntime mobilenet cpu --accuracy

Last line of output on P51, which appears to contain the benchmark results:

TestScenario.SingleStream qps=58.85, mean=0.0138, time=0.136, acc=62.500%, queries=8, tiles=50.0:0.0129,80.0:0.0137,90.0:0.0155,95.0:0.0171,99.0:0.0184,99.9:0.0187

where presumably qps means queries per second, and is the main result we are interested in, the more the better.

Running:

tools/make_fake_imagenet.sh

produces a tiny ImageNet subset with 8 images under fake_imagenet/.

fake_imagenet/val_map.txt contains:

val/800px-Porsche_991_silver_IAA.jpg 817

val/512px-Cacatua_moluccensis_-Cincinnati_Zoo-8a.jpg 89

val/800px-Sardinian_Warbler.jpg 13

val/800px-7weeks_old.JPG 207

val/800px-20180630_Tesla_Model_S_70D_2015_midnight_blue_left_front.jpg 817

val/800px-Welsh_Springer_Spaniel.jpg 156

val/800px-Jammlich_crop.jpg 233

val/782px-Pumiforme.JPG 285

TODO prepare and test on the actual ImageNet validation set, README says:

Prepare the imagenet dataset to come.

Since that one is undocumented, let's try the COCO dataset instead, which uses COCO 2017 and is also a bit smaller. Note that this is not part of MLperf anymore since v2.1, only ImageNet and Open Images are used. But still:

wget https://zenodo.org/record/4735652/files/ssd_mobilenet_v1_coco_2018_01_28.onnx

DATA_DIR_BASE=/mnt/data/coco

export DATA_DIR="${DATA_DIR_BASE}/val2017-300"

mkdir -p "$DATA_DIR_BASE"

cd "$DATA_DIR_BASE"

wget http://images.cocodataset.org/zips/val2017.zip

wget http://images.cocodataset.org/annotations/annotations_trainval2017.zip

unzip val2017.zip

unzip annotations_trainval2017.zip

mv annotations val2017

cd -

cd "$(git-toplevel)"

python tools/upscale_coco/upscale_coco.py --inputs "$DATA_DIR_BASE" --outputs "$DATA_DIR" --size 300 300 --format png

cd -

Now:

./run_local.sh onnxruntime mobilenet cpu --accuracy

fails immediately with:

No such file or directory: '/path/to/coco/val2017-300/val_map.txt

The more plausible looking:

./run_local.sh onnxruntime mobilenet cpu --accuracy --dataset coco-300

first takes a while to preprocess something most likely, which it does only once, and then fails:

Traceback (most recent call last):

  File "/home/ciro/git/inference/vision/classification_and_detection/python/main.py", line 596, in <module>

    main()

  File "/home/ciro/git/inference/vision/classification_and_detection/python/main.py", line 468, in main

    ds = wanted_dataset(data_path=args.dataset_path,

  File "/home/ciro/git/inference/vision/classification_and_detection/python/coco.py", line 115, in __init__

    self.label_list = np.array(self.label_list)

ValueError: setting an array element with a sequence. The requested array has an inhomogeneous shape after 2 dimensions. The detected shape was (5000, 2) + inhomogeneous part.

TODO!

Stockfish CLI Updated 2025-10-14

Most of what follows is part of the Universal Chess Interface. Tested on Ubuntu 22.10, Stockfish 14.1.

After starting stockfish on the command line, d (presumably display) contains:

+---+---+---+---+---+---+---+---+

| r | n | b | q | k | b | n | r | 8

+---+---+---+---+---+---+---+---+

| p | p | p | p | p | p | p | p | 7

+---+---+---+---+---+---+---+---+

|   |   |   |   |   |   |   |   | 6

+---+---+---+---+---+---+---+---+

|   |   |   |   |   |   |   |   | 5

+---+---+---+---+---+---+---+---+

|   |   |   |   |   |   |   |   | 4

+---+---+---+---+---+---+---+---+

|   |   |   |   |   |   |   |   | 3

+---+---+---+---+---+---+---+---+

| P | P | P | P | P | P | P | P | 2

+---+---+---+---+---+---+---+---+

| R | N | B | Q | K | B | N | R | 1

+---+---+---+---+---+---+---+---+

  a   b   c   d   e   f   g   h

Fen: rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1

Key: 8F8F01D4562F59FB

Sweet ASCII art. where:

- Fen: FEN notation

- Key: TODO
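The Fen line encodes the same board that d draws. As a rough illustration, here is a minimal Python sketch that expands the piece placement field of a FEN string into eight rows of eight characters. fen_to_rows and side_to_move are hypothetical helper names, not part of Stockfish or of the Universal Chess Interface.

```python
def fen_to_rows(fen):
    """Expand the piece-placement field of a FEN string into 8 strings of 8 characters.

    Digits in FEN stand for runs of empty squares; they are expanded to '.'.
    Rows are returned from rank 8 down to rank 1, the same order d prints them.
    """
    placement = fen.split()[0]
    rows = []
    for rank in placement.split("/"):
        row = "".join("." * int(c) if c.isdigit() else c for c in rank)
        if len(row) != 8:
            raise ValueError(f"bad rank in FEN: {rank!r}")
        rows.append(row)
    if len(rows) != 8:
        raise ValueError("FEN must describe 8 ranks")
    return rows


def side_to_move(fen):
    """Return 'w' or 'b', the second FEN field."""
    return fen.split()[1]


if __name__ == "__main__":
    start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
    for rank_number, row in zip(range(8, 0, -1), fen_to_rows(start)):
        print(row, rank_number)
    print("side to move:", side_to_move(start))
```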

Move white king's pawn from e2 to e4:

position startpos moves e2e4

Then display again:

d

gives:

+---+---+---+---+---+---+---+---+

| r | n | b | q | k | b | n | r | 8

+---+---+---+---+---+---+---+---+

| p | p | p | p | p | p | p | p | 7

+---+---+---+---+---+---+---+---+

|   |   |   |   |   |   |   |   | 6

+---+---+---+---+---+---+---+---+

|   |   |   |   |   |   |   |   | 5

+---+---+---+---+---+---+---+---+

|   |   |   |   | P |   |   |   | 4

+---+---+---+---+---+---+---+---+

|   |   |   |   |   |   |   |   | 3

+---+---+---+---+---+---+---+---+

| P | P | P | P |   | P | P | P | 2

+---+---+---+---+---+---+---+---+

| R | N | B | Q | K | B | N | R | 1

+---+---+---+---+---+---+---+---+

  a   b   c   d   e   f   g   h

Fen: rnbqkbnr/pppppppp/8/8/4P3/8/PPPP1PPP/RNBQKBNR b KQkq - 0 1

Key: B46022469E3DD31B

so we see that the pawn moved.

Now let's make Stockfish think for one second what is the next best move for black:

go movetime 1000

gives as the last line:

bestmove c7c5 ponder g1f3

TODO:

- what is ponder? Something to do with thinking on the opponent's turn: permanent brain.

- understand the previous lines
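The last line of the go output can be handled mechanically. Here is a minimal Python sketch, assuming the "bestmove <move> ponder <move>" line shape seen above with the ponder part optional. parse_bestmove and position_command are hypothetical helper names, and nothing here talks to a real engine. As far as the UCI protocol description goes, the ponder move is the reply the engine expects from the opponent and would like to keep thinking about.

```python
def parse_bestmove(line):
    """Parse a UCI 'bestmove' line into (best, ponder); ponder is None if absent."""
    tokens = line.split()
    if len(tokens) < 2 or tokens[0] != "bestmove":
        raise ValueError(f"not a bestmove line: {line!r}")
    ponder = None
    if len(tokens) >= 4 and tokens[2] == "ponder":
        ponder = tokens[3]
    return tokens[1], ponder


def position_command(moves):
    """Build the UCI command that reloads the start position and replays all moves."""
    if not moves:
        return "position startpos"
    return "position startpos moves " + " ".join(moves)


if __name__ == "__main__":
    moves = ["e2e4"]
    best, ponder = parse_bestmove("bestmove c7c5 ponder g1f3")
    moves.append(best)
    print(position_command(moves))  # position startpos moves e2e4 c7c5
    print("expected reply:", ponder)
```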

To make the move it as suggested for black, we have to either repeat the entire sequence of movements:or alternatively we could also use the previous FEN notation as a starting point;Note how the Universal Chess Interface interface is very simple: we just load a state and then decide what to do next for that one state. The engine holds only one and exactly one state at a time, and you can't even modify it differentially without loading new one from scratch.

position startpos moves e2e4 c7c5d: +---+---+---+---+---+---+---+---+

| r | n | b | q | k | b | n | r | 8

+---+---+---+---+---+---+---+---+

| p | p | | p | p | p | p | p | 7

+---+---+---+---+---+---+---+---+

| | | | | | | | | 6

+---+---+---+---+---+---+---+---+

| | | p | | | | | | 5

+---+---+---+---+---+---+---+---+

| | | | | P | | | | 4

+---+---+---+---+---+---+---+---+

| | | | | | | | | 3

+---+---+---+---+---+---+---+---+

| P | P | P | P | | P | P | P | 2

+---+---+---+---+---+---+---+---+

| R | N | B | Q | K | B | N | R | 1

+---+---+---+---+---+---+---+---+

a b c d e f g h

Fen: rnbqkbnr/pp1ppppp/8/2p5/4P3/8/PPPP1PPP/RNBQKBNR w KQkq - 0 2

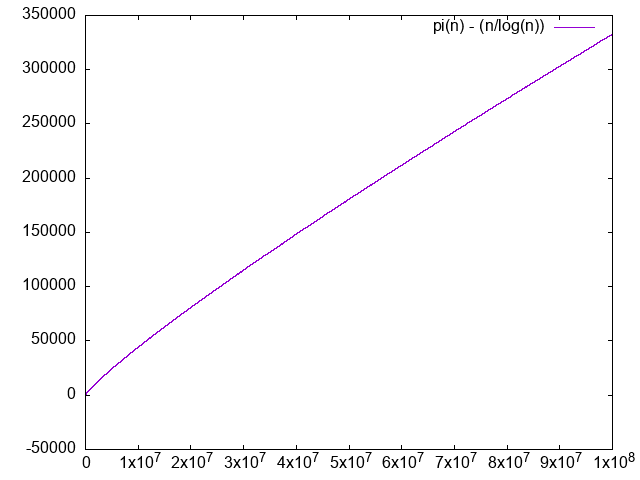

Key: 4CA78BCE9C2980B0position fen rnbqkbnr/pppppppp/8/8/4P3/8/PPPP1PPP/RNBQKBNR b KQkq - 0 1 moves c7c5 torchvision ResNet Updated 2025-07-16

pytorch.org/vision/0.13/models.html has a minimal runnable example adapted to python/pytorch/resnet_demo.py.

That example uses a ResNet pre-trained on the COCO dataset to do some inference, tested on Ubuntu 22.10:

cd python/pytorch

wget -O resnet_demo_in.jpg https://upload.wikimedia.org/wikipedia/commons/thumb/6/60/Rooster_portrait2.jpg/400px-Rooster_portrait2.jpg

./resnet_demo.py resnet_demo_in.jpg resnet_demo_out.jpg

This first downloads the model, which is currently 167 MB.

We know it is COCO because of the docs: pytorch.org/vision/0.13/models/generated/torchvision.models.detection.fasterrcnn_resnet50_fpn_v2.html which explains that FasterRCNN_ResNet50_FPN_V2_Weights.DEFAULT is an alias for:

FasterRCNN_ResNet50_FPN_V2_Weights.COCO_V1

After it finishes, the program prints the recognized classes:

['bird', 'banana']

so we get the expected bird, but also the more intriguing banana.

Twin prime conjecture Updated 2025-07-16

Let's show them how it's done with primes + awk. Edit. They have a gives us the list of all twin primes up to 100:Tested on Ubuntu 22.10.

-d option which also shows gaps!!! Too strong:sudo apt install bsdgames

primes -d 1 100 | awk '/\(2\)/{print $1 - 2, $1 }'0 2

3 5

5 7

11 13

17 19

29 31

41 43

59 61

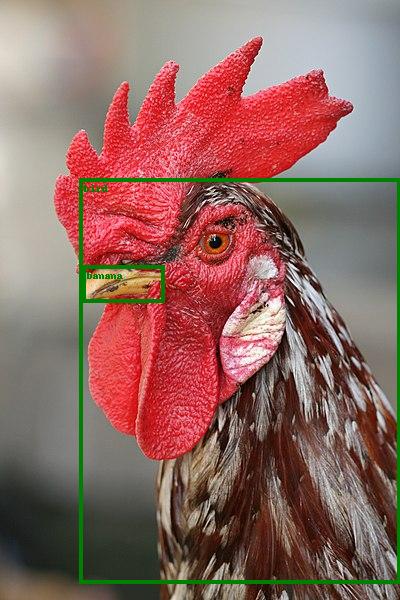

71 73 yolov5-pip Updated 2025-07-16

OK, now we're talking, two liner and you get a window showing bounding box object detection from your webcam feed!

python -m pip install -U yolov5==7.0.9

yolov5 detect --source 0

The accuracy is crap for anything but people. But still. Well done. Tested on Ubuntu 22.10, P51.