South China Morning Post Created 2024-11-04 Updated 2025-07-16

World on a Wire Created 2024-11-04 Updated 2025-07-16

Chinese newspaper Created 2024-11-04 Updated 2025-07-16

Israeli company Created 2024-11-04 Updated 2025-07-16

International Bureau of Weights and Measures Created 2024-11-04 Updated 2025-07-16

Story about the simulation hypothesis Created 2024-11-04 Updated 2025-07-16

Life simulation game Created 2024-11-04 Updated 2025-07-16

Dmitriy Khaladzhi carrying a horse over his shoulders Created 2024-11-04 Updated 2025-07-16

List of memes Created 2024-11-04 Updated 2025-07-16

Idealist Created 2024-11-04 Updated 2025-07-16

A few of the "I'd rather starve and do what I love than work some bullshit job" people:

- www.youtube.com/watch?v=dD5hYCN-tmU&t Worldyman, a German skater. Ciro Santilli said hi at: www.youtube.com/watch?v=dD5hYCN-tmU&lc=Ugz_QQOwrRG5Wjm52hp4AaABAg His reply unfortunately suggests mental illness:

Single photon detection Created 2024-10-28 Updated 2025-07-16

Single photon production Created 2024-10-28 Updated 2025-07-16

Single photon production and detection Created 2024-10-28 Updated 2025-07-16

The particular case of the double-slit experiment will be discussed at: single particle double slit experiment.

Detectors are generally called photomultipliers:

Bibliography:

- iopscience.iop.org/book/978-0-7503-3063-3.pdf Quantum Mechanics in the Single Photon Laboratory by Waseem, Ilahi and Anwar (2020)

How to use an SiPM - Experiment Video by SensLTech (2018)

Single-photon detectors - Krister Shalm by Institute for Quantum Computing (2013)

Field electron emission Created 2024-10-28 Updated 2025-07-16

Free electron model Created 2024-10-28 Updated 2025-07-16

Drude model Created 2024-10-28 Updated 2025-07-16

Potential barrier Created 2024-10-28 Updated 2025-07-16

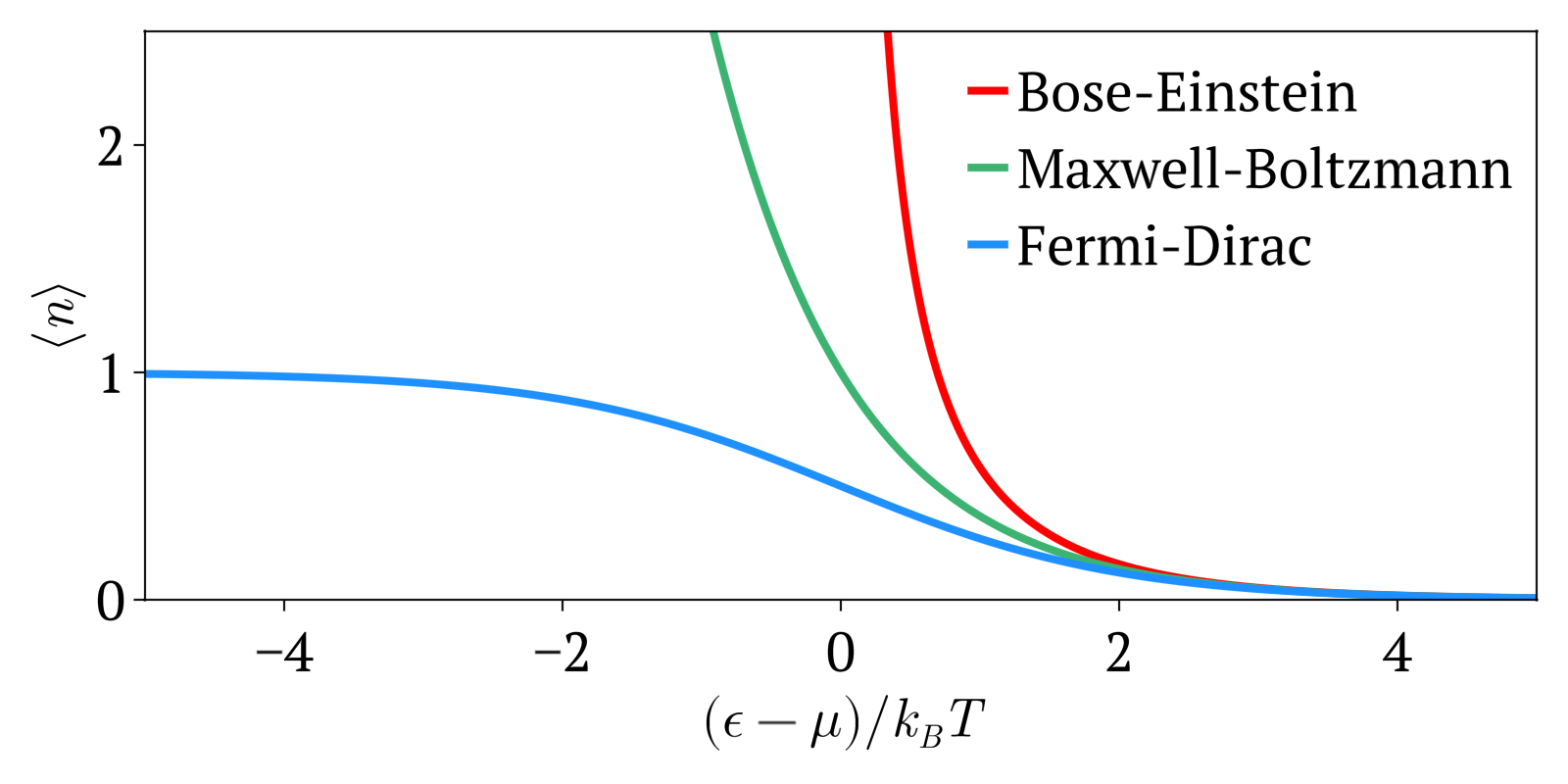

Maxwell-Boltzmann vs Bose-Einstein vs Fermi-Dirac statistics Created 2024-10-28 Updated 2025-07-16

Maxwell-Boltzmann statistics, Bose-Einstein statistics and Fermi-Dirac statistics all describe how energy is distributed in different physical systems at a given temperature.

For example, Maxwell-Boltzmann statistics describes how the speeds of particles are distributed in an ideal gas.

The temperature of a gas only reflects the statistical average kinetic energy of its particles. At a given temperature, not all particles move at exactly the average speed: some are faster and others slower.

For a large number of particles, however, the fraction of particles that have a speed in a given range at a given temperature is highly predictable, and that fraction is what the distributions give.

One of the main reasons to learn these statistics is to determine the probability, and therefore the average rate, at which an event that requires a minimum energy occurs. For example, for a chemical reaction to happen, both input molecules need a certain speed to overcome the potential barrier of the reaction. Therefore, if we know how many particles have energy above some threshold, we can estimate the rate of the reaction at a given temperature.
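The threshold argument can be made concrete for a classical ideal gas. The sketch below assumes the 3D Maxwell-Boltzmann distribution of kinetic energy, f(E) = 2·sqrt(E/π)·(k_BT)^(-3/2)·e^(-E/k_BT), whose tail above a threshold E_a has the closed form erfc(√x) + 2·√(x/π)·e^(-x) with x = E_a/(k_BT). The helper names and the 0.5 eV barrier are hypothetical, chosen only for illustration, and counting particles above threshold is only a crude proxy for a real reaction rate.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def mb_energy_pdf(energy_ev: float, temperature_k: float) -> float:
    """Maxwell-Boltzmann probability density of kinetic energy for a 3D ideal gas."""
    kt = K_B_EV * temperature_k
    return 2.0 * math.sqrt(energy_ev / math.pi) * kt ** -1.5 * math.exp(-energy_ev / kt)


def fraction_above(threshold_ev: float, temperature_k: float) -> float:
    """Fraction of particles with kinetic energy above threshold_ev.

    Closed form of the integral of mb_energy_pdf from threshold_ev to infinity.
    """
    x = threshold_ev / (K_B_EV * temperature_k)
    return math.erfc(math.sqrt(x)) + 2.0 * math.sqrt(x / math.pi) * math.exp(-x)


# Hypothetical 0.5 eV barrier: compare the above-threshold fraction at two temperatures.
barrier_ev = 0.5
for t in (300.0, 310.0):
    print(f"T = {t:.0f} K: fraction above {barrier_ev} eV = {fraction_above(barrier_ev, t):.3e}")
ratio = fraction_above(barrier_ev, 310.0) / fraction_above(barrier_ev, 300.0)
print(f"ratio 310 K / 300 K = {ratio:.2f}")
```

Because the fraction falls off roughly exponentially with E_a/(k_BT), even a 10 K increase noticeably raises the number of particles able to cross a barrier that is many times larger than k_BT.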

The three distributions can be summarized as:

- Maxwell-Boltzmann statistics: classical statistics that ignore quantum effects. It is therefore only an approximation: the other two statistics are the more precise quantum versions of Maxwell-Boltzmann, and both tend to it at high temperatures or low concentrations. This is why Maxwell-Boltzmann statistics work well in that regime.

- Bose-Einstein statistics: quantum version of Maxwell-Boltzmann statistics for bosons

- Fermi-Dirac statistics: quantum version of Maxwell-Boltzmann statistics for fermions. Sample system: electrons in a metal, which gives rise to the free electron model. Unlike Maxwell-Boltzmann statistics, this explains many important experimental observations, such as the specific heat capacity of metals. A very cool and concrete example can be seen at youtu.be/5V8VCFkAd0A?t=1187 from the video "Using a Photomultiplier to Detect single photons" by Huygens Optics, where spontaneous field electron emission would follow Fermi-Dirac statistics. In this case, the electrons with enough energy to escape are undesired and a source of noise in the experiment.
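To see how the two quantum statistics tend to Maxwell-Boltzmann, the sketch below uses the standard textbook mean occupation number of a single-particle state, 1/(e^((E-μ)/k_BT) + a), with a = +1 for Fermi-Dirac, a = -1 for Bose-Einstein and a = 0 for Maxwell-Boltzmann. The chemical potential μ, this formula and the helper name `occupancy` are additions not taken from the text above. When (E - μ) ≫ k_BT, every state is sparsely occupied and the ±1 becomes negligible next to the exponential, so the three curves merge.

```python
import math

# Constant added to the exponential in the denominator of each distribution.
DENOMINATOR_CONSTANT = {
    "fermi-dirac": 1.0,
    "maxwell-boltzmann": 0.0,
    "bose-einstein": -1.0,
}


def occupancy(x: float, statistics: str) -> float:
    """Mean number of particles in one single-particle state (hypothetical helper).

    x is the dimensionless quantity (E - mu) / (k_B T).
    """
    if statistics == "bose-einstein" and x <= 0:
        raise ValueError("Bose-Einstein occupancy requires E > mu")
    return 1.0 / (math.exp(x) + DENOMINATOR_CONSTANT[statistics])


for x in (0.1, 1.0, 3.0, 10.0):
    row = ", ".join(f"{name} = {occupancy(x, name):.5f}" for name in DENOMINATOR_CONSTANT)
    print(f"(E - mu)/kT = {x:>4}: {row}")
```

Fermi-Dirac occupancy never exceeds 1 (at most one fermion per state), while Bose-Einstein occupancy grows without bound as E approaches μ.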

A good conceptual starting point is the example given in The Harvest of a Century by Siegmund Brandt (2008).

Consider a system with 2 particles and 3 states. Remember that:

- in quantum statistics (Bose-Einstein statistics and Fermi-Dirac statistics), particles are indistinguishable, so we might as well call both of them A, as opposed to A and B in non-quantum statistics

- in Bose-Einstein statistics, two particles may occupy the same state. In Fermi-Dirac statistics, they may not

Therefore, all the possible ways to put those two particles in three states are:

- Maxwell-Boltzmann distribution: both A and B can go anywhere:

- Bose-Einstein statistics: because the particles are indistinguishable, the two Maxwell-Boltzmann configurations that differ only by swapping A and B between two different states now count as a single possibility.

- Fermi-Dirac statistics: configurations with two particles in the same state are no longer possible:
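The counting for 2 particles in 3 states can be checked by brute force. In this sketch, each configuration is assumed to be equally likely, states are labeled 0, 1 and 2, and the helper `fraction_same_state` is a hypothetical name. Distinguishable particles map to ordered pairs, indistinguishable particles that may share a state map to unordered pairs with repetition, and fermions map to unordered pairs of distinct states.

```python
from itertools import combinations, combinations_with_replacement, product

STATES = range(3)

# Maxwell-Boltzmann: distinguishable A and B, each in any state -> (state of A, state of B).
mb = list(product(STATES, repeat=2))
# Bose-Einstein: indistinguishable, sharing allowed -> unordered pairs with repetition.
be = list(combinations_with_replacement(STATES, 2))
# Fermi-Dirac: indistinguishable, no sharing -> unordered pairs of distinct states.
fd = list(combinations(STATES, 2))


def fraction_same_state(configs):
    """Fraction of equally likely configurations with both particles in one state."""
    return sum(1 for s1, s2 in configs if s1 == s2) / len(configs)


for name, configs in [("Maxwell-Boltzmann", mb), ("Bose-Einstein", be), ("Fermi-Dirac", fd)]:
    print(f"{name}: {len(configs)} configurations, "
          f"fraction with both particles in the same state = {fraction_same_state(configs):.3f}")
```

This gives 9, 6 and 3 configurations respectively, with the fraction of "both in the same state" configurations going from 1/3 for Maxwell-Boltzmann up to 1/2 for Bose-Einstein and down to 0 for Fermi-Dirac.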

AgoraDesk Created 2024-10-26 Updated 2025-07-16

SimpleSwap Created 2024-10-26 Updated 2025-07-16
