Amazon EC2 GPU Updated 2025-07-16

As of December 2023, the cheapest instance with an Nvidia GPU is g4dn.xlarge, so let's try that out. In that instance, lspci contains:

00:1e.0 3D controller: NVIDIA Corporation TU104GL [Tesla T4] (rev a1)

so we see that it runs an Nvidia T4 GPU.

Be careful not to confuse it with g4ad.xlarge, which has an AMD GPU instead. TODO meaning of "ad"? "a" presumably means AMD, but what is the "d"?
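The same lspci check can be scripted. The following is a minimal Python sketch, assuming lspci is on the PATH; the helper names find_nvidia_devices and list_gpus are made up for illustration:

```python
import subprocess
from typing import List


def find_nvidia_devices(lspci_output: str) -> List[str]:
    """Return the lspci lines that mention an NVIDIA device."""
    return [
        line.strip()
        for line in lspci_output.splitlines()
        if "nvidia" in line.lower()
    ]


def list_gpus() -> List[str]:
    """Run lspci (assumed to be installed) and filter its output."""
    result = subprocess.run(
        ["lspci"], capture_output=True, text=True, check=True
    )
    return find_nvidia_devices(result.stdout)
```

Calling list_gpus() on the instance should return the 3D controller line, while an empty list would suggest that an instance type without an Nvidia GPU was picked.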

Some documentation on which GPU is in each instance can be seen at: docs.aws.amazon.com/dlami/latest/devguide/gpu.html (archive) with a list of which GPUs they have at that random point in time. Can the GPU ever change for a given instance name? Likely not. Also as of December 2023 the list is already outdated, e.g. P5 is not shown, though it is mentioned at: aws.amazon.com/ec2/instance-types/p5/

When selecting the instance to launch, the GPU apparently does not show anywhere on the instance information page, it is so bad!

Also note that this instance has 4 vCPUs, so on a new account you must first make a customer support request to Amazon to increase your limit from the default of 0 to 4, see also: stackoverflow.com/questions/68347900/you-have-requested-more-vcpu-capacity-than-your-current-vcpu-limit-of-0, otherwise instance launch will fail with:

You have requested more vCPU capacity than your current vCPU limit of 0 allows for the instance bucket that the specified instance type belongs to. Please visit aws.amazon.com/contact-us/ec2-request to request an adjustment to this limit.

When starting up the instance, also select:

- image: Ubuntu 22.04

- storage size: 30 GB (maximum free tier allowance)

Once you finally managed to SSH into the instance, first we have to install drivers and reboot:

sudo apt update

sudo apt install nvidia-driver-510 nvidia-utils-510 nvidia-cuda-toolkit

sudo reboot

and now running:

nvidia-smi

shows something like:

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 525.147.05 Driver Version: 525.147.05 CUDA Version: 12.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:00:1E.0 Off | 0 |

| N/A 25C P8 12W / 70W | 2MiB / 15360MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

If we start from the raw Ubuntu 22.04, first we have to install drivers:

- docs.aws.amazon.com/AWSEC2/latest/UserGuide/install-nvidia-driver.html official docs

- stackoverflow.com/questions/63689325/how-to-activate-the-use-of-a-gpu-on-aws-ec2-instance

- askubuntu.com/questions/1109662/how-do-i-install-cuda-on-an-ec2-ubuntu-18-04-instance

- askubuntu.com/questions/1397934/how-to-install-nvidia-cuda-driver-on-aws-ec2-instance

From there basically everything should just work as normal. E.g. we were able to run a CUDA hello world just fine with:

nvcc inc.cu

./a.out

One issue with this setup, besides the time it takes to set up, is that you might also have to pay some network charges as it downloads a bunch of stuff into the instance. We should try out some of the pre-built images. But it is also good to know this pristine setup just in case.

We then managed to run Ollama just fine with:

curl https://ollama.ai/install.sh | sh

/bin/time ollama run llama2 'What is quantum field theory?'

which gave:

0.07user 0.05system 0:16.91elapsed 0%CPU (0avgtext+0avgdata 16896maxresident)k

0inputs+0outputs (0major+1960minor)pagefaults 0swaps

so way faster than on my local desktop CPU, hurray.

After setup from: askubuntu.com/a/1309774/52975 we were able to run:

head -n1000 pap.txt | ARGOS_DEVICE_TYPE=cuda time argos-translate --from-lang en --to-lang fr > pap-fr.txt

which gave:

77.95user 2.87system 0:39.93elapsed 202%CPU (0avgtext+0avgdata 4345988maxresident)k

0inputs+88outputs (0major+910748minor)pagefaults 0swaps

so only marginally better than on P14s. It would be fun to see how much faster we could make things on a more powerful GPU.

Amazon EC2 hello world Updated 2025-07-16

As of December 2023 on a t2.micro instance, the only one that was part of the free tier at the time, with advertised 1 vCPU, 1 GiB RAM, 8 GiB disk for the first 12 months, on Ubuntu 22.04:

$ free -h

total used free shared buff/cache available

Mem: 949Mi 149Mi 210Mi 0.0Ki 590Mi 641Mi

Swap: 0B 0B 0B

$ nproc

1

$ df -h /

Filesystem Size Used Avail Use% Mounted on

/dev/root 7.6G 1.8G 5.8G 24% /

To install software:

sudo apt update

sudo apt install cowsay

cowsay asdf

ampy Updated 2025-07-16

Install on Ubuntu 22.04:

python3 -m pip install --user adafruit-ampy

aviad12g/ARC-AGI-solution 2025-10-14

It seems to have been tested on something older than Ubuntu 24.04, as the 24.04 install requires some porting. I started the process at: github.com/cirosantilli/ARC-AGI-solution/tree/ubuntu-24-04 but gave up to try Ubuntu 22.04 instead.

Ubuntu 22.04 Docker install worked without patches, after installing Poetry e.g. to try and solve 1ae2feb7:

git clone https://github.com/aviad12g/ARC-AGI-solution

cd ARC-AGI-solution

git checkout f3283f727488ad98fe575ea6a5ac981e4a188e49

poetry install

git clone https://github.com/arcprize/ARC-AGI-2

`poetry env activate`

export PYTHONPATH="$PWD/src:$PYTHONPATH"

python3 -m arc_solver.cli.main solve ARC-AGI-2/data/evaluation/1ae2feb7.json

but towards the end we have the following, so it failed:

{

"success": false,

"error": "Search failed: no_multi_example_solution",

"search_stats": {

"nodes_expanded": 21,

"nodes_generated": 903,

"termination_reason": "no_multi_example_solution",

"candidates_generated": 25,

"examples_validated": 3,

"validation_success_rate": 0.0,

"multi_example_used": true

},

"predictions": [

null,

null,

null

],

"computation_time": 30.234344280001096,

"task_id": "1ae2feb7",

"task_file": "ARC-AGI-2/data/evaluation/1ae2feb7.json",

"solver_version": "0.1.0",

"total_time": 30.24239572100123,

"timestamp": 1760353369.9701269

}

Task: 1ae2feb7.json

Success: False

Error: Search failed: no_multi_example_solution

Multi-example validation: ENABLED

Training examples validated: 3

Candidates generated: 25

Validation success rate: 0.0%

Computation time: 30.23s

Total time: 30.24s

Let's see if any of them work at all as advertised:

ls ARC-AGI-2/data/evaluation/ | xargs -I'{}' python3 -m arc_solver.cli.main solve 'ARC-AGI-2/data/evaluation/{}' |& tee tmp.txt

and at the end:

grep 'Success: True' tmp.txt | wc

has only 7 successes.

Also weirdly:

grep 'Success: True' tmp.txt | wc

only has 102 hits, but there were 120 JSON tasks in that folder. I searched for the missing executions:

diff -u <(grep Task: tmp.txt | cut -d' ' -f2) <(ls ARC-AGI-2/data/evaluation)

The first missing one is 135a2760, it blows up with:

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

and grepping ERROR gives us:

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type SizePredicate is not JSON serializable

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type ndarray is not JSON serializable

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type ndarray is not JSON serializable

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type VerticalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type VerticalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type ndarray is not JSON serializable

ERROR: Solve command failed: Object of type VerticalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type ndarray is not JSON serializable

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type HorizontalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type VerticalLinePredicate is not JSON serializable

ERROR: Solve command failed: Object of type VerticalLinePredicate is not JSON serializable

Reported at: github.com/aviad12g/ARC-AGI-solution/issues/1

Ciro Santilli's hardware Dell Inspiron 15 3520 Updated 2025-07-16

Bought May 2024 to be my clean crypto-only computer. Searched for cheapest 1 TB disk 16 GB RAM not too old on Amazon with Ubuntu certification, and that was it at £479.00.

Some reviews:

OPSEC: will run only cryptocurrency wallets and nothing else. Will connect to Internet, but never ever to a non clean USB flash drive.

Bootstrap OPSEC:

- turn on from factory, start Windows 11 Home 23H2 build 22631.2715, connect to home Wifi during setup process. Considered skipping WiFi, but I'll want to download the Ubuntu ISO later on anyways answers.microsoft.com/en-us/windows/forum/all/bypass-lets-connect-you-to-a-network/2ce188f6-1b28-45a0-97d2-bfccfa3c9188. Don't sign in to online Windows account, and turn off all spyware requests.

- on preinstalled Edge browser, download Ubuntu 24.04 ISO from ubuntu.com, check sha256 with Get-FileHash on PowerShell even though that is pointless security.stackexchange.com/questions/1687/does-hashing-a-file-from-an-unsigned-website-give-a-false-sense-of-security, download balenaEtcher portable from etcher.balena.io/ (currently recommended burner at ubuntu.com/download/desktop#how-to-install), and burn Ubuntu into a SanDisk Ultra Flair 64 GB

- install Ubuntu from USB flash. No internet connection initially, default everything.

- notice that Ubuntu 24.04 is too broken, write Ubuntu 22.04.4 to the previously used USB from Ubuntu, and then install 22.04 instead... minimal installation, encrypted ZFS

- Ubuntu 24.04 "The application files has closed unexpectedly". This likely terminated uncompression of the bz2 halfway, and led to a corrupted monerod...

- askubuntu.com/questions/15520/how-can-i-tell-ubuntu-to-do-nothing-when-i-close-my-laptop-lid fix the eternal laptop lid issue without GUI solution...

- copy view only wallet private key by taking a picture of the QR code with Android cell phone. This gives it to the CIA immediately, but that's fine as we're going to publish it publicly.

It must have taken about one week running full time to sync the Monero blockchain which at the time was at about 3.1M blocks! I checked on system explorer, and CPU and internet usage was never maxed out, suggesting simply slow network. But the computer still overheated quite a bit and froze a few times.
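The SHA-256 check from the bootstrap steps can also be done from Python on any OS, as a rough equivalent of Get-FileHash. This is only a sketch: the function names are illustrative, the expected hash has to be copied from wherever the ISO publisher lists it, and it does nothing about the trust problem discussed in the security.stackexchange.com link.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the lowercase hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so that a multi-gigabyte ISO does not need to fit in RAM.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path: str, published_hex: str) -> bool:
    """Compare case-insensitively, since Get-FileHash prints uppercase hex."""
    return sha256_of_file(path) == published_hex.strip().lower()
```

For example, matches_published_hash("ubuntu.iso", expected) with a hypothetical file name and the published hash string returns True only when the download is bit-for-bit identical to what the publisher hashed.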

Compile MicroPython code for Micro Bit locally Updated 2025-07-27

To use a prebuilt firmware, you can just use uflash, tested on Ubuntu 22.04:

git clone https://github.com/bbcmicrobit/micropython

cd micropython

git checkout 7fc33d13b31a915cbe90dc5d515c6337b5fa1660

uflash examples/led_dance.py

What that does is:

- convert the MicroPython code to bytecode

- join it up with a prebuilt firmware that ships with uflash which contains the MicroPython interpreter

- flash that

To build your own firmware see: Compile MicroPython code for Micro Bit locally on Ubuntu 22.04 with your own firmware

Compile MicroPython code for Micro Bit locally on Ubuntu 22.04 with your own firmware Updated 2025-07-27

TODO didn't manage from source on Ubuntu 22.04, their setup bitrotted way too fast... it's shameful even. In the end I gave up and went for the magic Docker of ghcr.io/carlosperate/microbit-toolchain + github.com/bbcmicrobit/micropython, and it bloody worked:

git clone https://github.com/bbcmicrobit/micropython

cd micropython

git checkout 7fc33d13b31a915cbe90dc5d515c6337b5fa1660

docker pull ghcr.io/carlosperate/microbit-toolchain:latest

docker run -v $(pwd):/home --rm ghcr.io/carlosperate/microbit-toolchain:latest yt target bbc-microbit-classic-gcc-nosd@https://github.com/lancaster-university/yotta-target-bbc-microbit-classic-gcc-nosd

docker run -v $(pwd):/home --rm ghcr.io/carlosperate/microbit-toolchain:latest make all

# Build one.

tools/makecombinedhex.py build/firmware.hex examples/counter.py -o build/counter.hex

cp build/counter.hex "/media/$USER/MICROBIT/"

# Build all.

for f in examples/*; do b="$(basename "$f")"; echo $b; tools/makecombinedhex.py build/firmware.hex "$f" -o "build/${b%.py}.hex"; done

The pre-Docker attempts:

sudo add-apt-repository -y ppa:team-gcc-arm-embedded

sudo apt update

sudo apt install gcc-arm-embedded

sudo apt install cmake ninja-build srecord libssl-dev

# Rust required for some Yotta component, OMG.

sudo snap install rustup

rustup default 1.64.0

python3 -m pip install yotta

The line:

sudo add-apt-repository -y ppa:team-gcc-arm-embedded

warns:

E: The repository 'https://ppa.launchpadcontent.net/team-gcc-arm-embedded/ppa/ubuntu jammy Release' does not have a Release file.

N: Updating from such a repository can't be done securely, and is therefore disabled by default.

N: See apt-secure(8) manpage for repository creation and user configuration details.

and then the update/sudo apt-get install gcc-arm-embedded fails, bibliography:

Attempting to install Yotta:

sudo -H pip3 install yotta

or:

python3 -m pip install --user yotta

was failing with:

Exception: Version mismatch: this is the 'cffi' package version 1.15.1, located in '/tmp/pip-build-env-dinhie_9/overlay/local/lib/python3.10/dist-packages/cffi/api.py'. When we import the top-level '_cffi_backend' extension module, we get version 1.15.0, located in '/usr/lib/python3/dist-packages/_cffi_backend.cpython-310-x86_64-linux-gnu.so'. The two versions should be equal; check your installation.

Running:

python3 -m pip install --user cffi==1.15.1

did not help. Bibliography:

From a clean virtualenv, it appears to move further, and then fails at:

Building wheel for cmsis-pack-manager (pyproject.toml) ... error

error: [Errno 2] No such file or directory: 'cargo'

So we install Rust and try again, OMG:

sudo snap install rustup

rustup default stable

which at the time of writing was rustc 1.64.0, and then OMG, it worked!! We have the yt command.

However, it is still broken, e.g.:

git clone https://github.com/lancaster-university/microbit-samples

cd microbit-samples

git checkout 285f9acfb54fce2381339164b6fe5c1a7ebd39d5

cp source/examples/invaders/* source

yt clean

yt build

blows up:

cannot import name 'soft_unicode' from 'markupsafe'

bibliography:

GHDL Updated 2025-07-16

Examples under vhdl.

Run all examples, which have assertions in them:

cd vhdl

./run

Files:

- Examples

- Basic

- vhdl/hello_world_tb.vhdl: hello world

- vhdl/min_tb.vhdl: min

- vhdl/assert_tb.vhdl: assert

- Lexer

- vhdl/comments_tb.vhdl: comments

- vhdl/case_insensitive_tb.vhdl: case insensitive

- vhdl/whitespace_tb.vhdl: whitespace

- vhdl/literals_tb.vhdl: literals

- Flow control

- vhdl/procedure_tb.vhdl: procedure

- vhdl/function_tb.vhdl: function

- vhdl/operators_tb.vhdl: operators

- Types

- vhdl/integer_types_tb.vhdl: integer types

- vhdl/array_tb.vhdl: array

- vhdl/record_tb.vhdl.bak: record. TODO fails with "GHDL Bug occurred" on GHDL 1.0.0

- vhdl/generic_tb.vhdl: generic

- vhdl/package_test_tb.vhdl: Packages

- vhdl/standard_package_tb.vhdl: standard package

- textio

* vhdl/write_tb.vhdl: write

* vhdl/read_tb.vhdl: read

- vhdl/std_logic_tb.vhdl: std_logic

- vhdl/stop_delta_tb.vhdl: --stop-delta

- Basic

- Applications

- Combinatoric

- vhdl/adder.vhdl: adder

- vhdl/sqrt8_tb.vhdl: sqrt8

- Sequential

- vhdl/clock_tb.vhdl: clock

- vhdl/counter.vhdl: counter

- Combinatoric

- Helpers

* vhdl/template_tb.vhdl: template

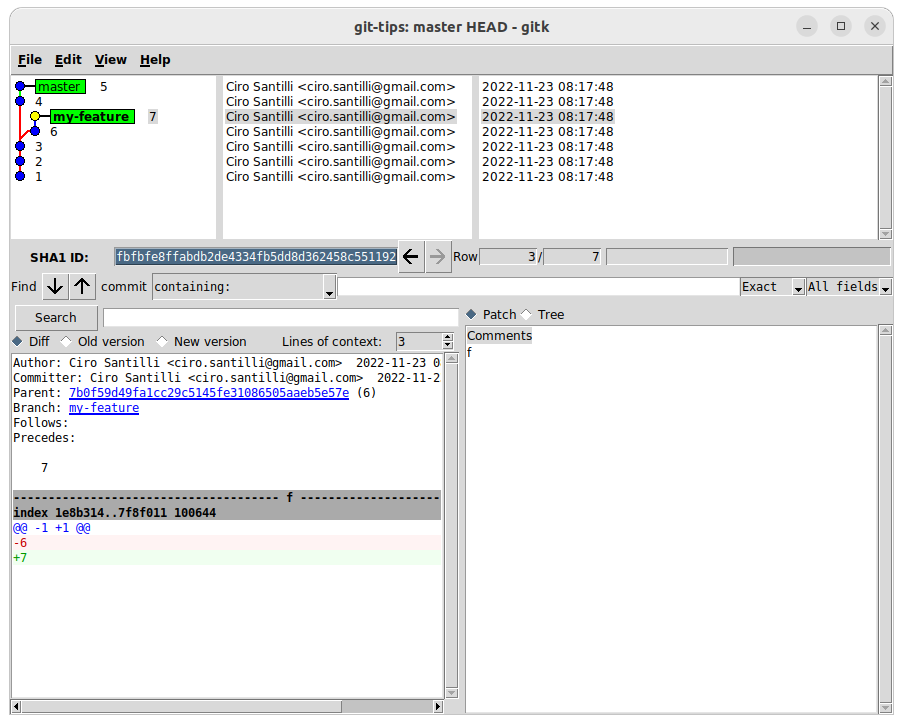

gitk Updated 2025-07-16

Kdenlive Updated 2025-07-16

This seems like a decent option, although it has bugs coming in and out all the time! Also it is quite hard to learn to use.

Shortcuts:

- Shift + R: cut tracks at current point. You can then select fragments to move around or delete.

- Shift mouse click drag: select multiple clips: video.stackexchange.com/questions/21598/select-range-of-clips-in-kdenlive

Add subtitles:

- Effects

- Dynamic text

then drag on top of the video track. To add only to part of the video, cut it up first.

= = SET EFFECT PARAM: "rect" = 0=1188 0 732 242

MUTEX LOCK!!!!!!!!!!!! slotactivateeffect: 1

// // // RESULTING REQUIRED SCENE: 1

Object 0x557293592da0 destroyed while one of its QML signal handlers is in progress.

Most likely the object was deleted synchronously (use QObject::deleteLater() instead), or the application is running a nested event loop.

This behavior is NOT supported!

qrc:/qml/EffectToolBar.qml:80: function() { [native code] }

Killed

Matplotlib Updated 2025-07-16

Tends to be Ciro's pick if gnuplot can't handle the use case, or if the project is really really serious.

Couldn't handle exploration of large datasets though: Survey of open source interactive plotting software with a 10 million point scatter plot benchmark by Ciro Santilli

Examples:

- matplotlib/hello.py

- matplotlib/educational2d.py

- matplotlib/axis.py

- matplotlib/label.py

- Line style

- Subplots

- matplotlib/two_lines.py

- Data from files

- Specialized

Micro Bit getting started Updated 2025-07-16

When plugged into Ubuntu 22.04 via the USB Micro-B the Micro Bit mounts as:

/media/$USER/MICROBIT/

e.g.:

/media/ciro/MICROBIT/

for username ciro.

Loading the program is done by simply copying a .hex binary into the image e.g. with:

cp ~/Downloads/microbit_program.hex /media/$USER/MICROBIT/

The file name does not matter, only the .hex extension.

Program Raspberry Pi Pico W with C Updated 2025-07-26

Ubuntu 22.04 build just worked, nice! It feels much cleaner than the Micro Bit C setup:

sudo apt install cmake gcc-arm-none-eabi libnewlib-arm-none-eabi libstdc++-arm-none-eabi-newlib

git clone https://github.com/raspberrypi/pico-sdk

cd pico-sdk

git checkout 2e6142b15b8a75c1227dd3edbe839193b2bf9041

cd ..

git clone https://github.com/raspberrypi/pico-examples

cd pico-examples

git checkout a7ad17156bf60842ee55c8f86cd39e9cd7427c1d

cd ..

export PICO_SDK_PATH="$(pwd)/pico-sdk"

cd pico-examples

mkdir build

cd build

# Board selection.

# https://www.raspberrypi.com/documentation/microcontrollers/c_sdk.html also says you can give wifi ID and password here for W.

cmake -DPICO_BOARD=pico_w ..

make -j

Then we install the programs just like any other UF2 by plugging it in with BOOTSEL pressed and copying the UF2 over, e.g.:

cp pico_w/blink/picow_blink.uf2 /media/$USER/RPI-RP2/

Note that there is a separate example for the W and non W LED, for non-W it is:

cp blink/blink.uf2 /media/$USER/RPI-RP2/

Also tested the UART over USB example:

cp hello_world/usb/hello_usb.uf2 /media/$USER/RPI-RP2/

You can then see the UART messages with:

screen /dev/ttyACM0 115200

TODO understand the proper debug setup, and a flash setup that doesn't require us to unplug and replug the thing every two seconds. www.electronicshub.org/programming-raspberry-pi-pico-with-swd/ appears to describe it, with SWD to do both debug and flash. To do it, you seem to need another board with GPIO, e.g. a Raspberry Pi, the laptop alone is not enough.
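Until the SWD setup is figured out, the copy step itself can at least be scripted. The following Python sketch waits for the RPI-RP2 mount to show up and then copies the UF2 over. It does not remove the need to replug with BOOTSEL pressed, and the function names and timeouts are made up for illustration:

```python
import shutil
import time
from pathlib import Path


def wait_for_mount(mount: Path, timeout_s: float = 30.0, poll_s: float = 0.5) -> bool:
    """Poll until the mount directory exists, or give up after timeout_s."""
    deadline = time.monotonic() + timeout_s
    while True:
        if mount.is_dir():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


def flash_uf2(uf2: Path, mount: Path, timeout_s: float = 30.0) -> Path:
    """Copy a UF2 file onto the board's mass storage mount and return the destination."""
    if uf2.suffix != ".uf2":
        raise ValueError(f"expected a .uf2 file, got {uf2}")
    if not wait_for_mount(mount, timeout_s):
        raise TimeoutError(f"{mount} did not appear, was BOOTSEL pressed while plugging in?")
    # shutil.copy returns the path of the newly created file inside the mount.
    return Path(shutil.copy(uf2, mount))
```

Usage would be along the lines of flash_uf2(Path("pico_w/blink/picow_blink.uf2"), Path("/media") / os.environ["USER"] / "RPI-RP2") after an import os, started before plugging the board in.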

Raspberry Pi Pico W UART Updated 2025-07-16

You can connect from an Ubuntu 22.04 host as:

screen /dev/ttyACM0 115200

When in screen, you can Ctrl + C to kill main.py, and then execution stops and you are left in a Python shell. From there:

- Ctrl + D: reboots

- Ctrl + A K: kills the GNU screen window. Execution continues normally, but be aware of: Raspberry Pi Pico W freezes a few seconds after screen disconnects from UART.

Other options:

- ampy run command, which solves How to run a MicroPython script from a file on the Raspberry Pi Pico W from the command line?
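The Ctrl + C and Ctrl + D keys above are just the bytes 0x03 and 0x04 sent down the serial line, so in principle they can be sent without screen. The following Python sketch only illustrates that idea and has not been tested against the board: it assumes the same /dev/ttyACM0 device node, does not configure the terminal settings, and the helper names are made up.

```python
import os

CTRL_C = b"\x03"  # interrupts the running program, e.g. main.py
CTRL_D = b"\x04"  # reboots when sent at the Python shell prompt


def send_keys(fd: int, *keys: bytes) -> int:
    """Write each key sequence to an open file descriptor, return bytes written."""
    written = 0
    for key in keys:
        written += os.write(fd, key)
    return written


def open_serial(device: str = "/dev/ttyACM0") -> int:
    """Open the serial device without making it the controlling terminal."""
    return os.open(device, os.O_RDWR | os.O_NOCTTY)
```

A call such as send_keys(open_serial(), CTRL_C, CTRL_D) would then stop main.py and reboot, subject to the same "only one program connected at a time" caveat that applies to screen.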

The Three Treasures of the Programmer Updated 2025-09-09

Ciro Santilli's joke version of the Chinese Four Treasures of the Study!

- web browser

- Text editor

- terminal. Though to be honest, circa 2022, Ciro learned of the ctrl + click to open file (including with file.c:123 line syntax) ability of Visual Studio Code (likely present in other IDEs), and he was starting to consider dumping the terminal altogether if some implementation gets it really really right. The main thing is that it can't be a tiny little bar at the bottom, it has to be full window and super easily toggleable!

In the past, Ciro used to use file managers, which would be the fourth treasure. But he stopped doing so for years due to his cd alias... so it became three. He actually had exactly three windows open when he was checking if there was anything else he could not give up.

The Three Treasures of the Programmer. Featuring: Gvim, tmux running in GNOME terminal, and Chromium browser on Ubuntu 22.04. The minimized windows are for demonstration purposes, Cirism mandates that all windows shall be maximized at all times. Splits within a single program are permitted however.

Universal asynchronous receiver-transmitter Updated 2025-07-16

A good project to see UARTs at work in all their beauty is to connect two Raspberry Pis via UART, and then:

- type in one and see characters appear in the other: scribles.net/setting-up-uart-serial-communication-between-raspberry-pis/

- send data via a script: raspberrypi.stackexchange.com/questions/29027/how-should-i-properly-communicate-2-raspberry-pi-via-uart

Part of the beauty of this is that you can just connect both boards directly manually with a few wire-to-wire connections with simple jump wire. Its simplicity is just quite refreshing. Sure, you could do something like that for any physical layer link presumably...

Remember that you can only have one GNU screen connected at a time or else they will mess each other up: unix.stackexchange.com/questions/93892/why-is-screen-is-terminating-without-root/367549#367549

On Ubuntu 22.04 you can screen without sudo by adding yourself to the dialout group with:

sudo usermod -a -G dialout $USER

I shouldn't be doing this on funded OurBigBook time which is until the end of May, but I was getting too nervous and decided to start a casual job search to test the waters.

In particular I want to see if I can get past the HR lady step without toning down my online profiles. If nothing works out for the next round I'll be hiding anything too spicy like:

- prominently seeking funding for OurBigBook on my LinkedIn profile

- CIA 2010 covert communication websites references. This will be my first job hunt since I have published that article. Wish me luck.

- gay Putin profile picture on Stack Overflow

Gay Putin, currently used in Ciro Santilli's Stack Overflow profile. Ciro's profiles may be a bit too much for the HR ladies who reject his job applications on the spot. To be fair, perhaps not enough years of experience for certain applications and job hopping may have something to do with it too. But since they don't ever tell you anything not to get sued, we'll never know.

Another interesting point is to see if French companies are more likely to reply given that Ciro Santilli studied at École Polytechnique which the French worship.

I'm looking in particular either for:

- machine learning-adjacent jobs in companies that seem to be doing something that could further AGI, e.g. automatic code generation or robotics would be ideal

- quantum computing

- systems programming, which is what I actually have work experience with

I spent the last two weeks doing that:

- one week browsing everything of interest in London and Paris and sending applications to anything that seemed both relevant and interesting. Maintaining an application list at: Section "Job application by Ciro Santilli".

- one week on a very laborious but somewhat interesting take home exercise for Linux kernel engineer at Canonical, makers of Ubuntu. I had a week to finish 5 practical coding and packaging questions, and I tried to do everything as perfectly as possible, but I somewhat underestimated the amount of work and wait needed to do everything and didn't manage to finish question 4 and missed 5. Oops let's see how that goes. At least this had a few good outcomes for the Internet as I tried to document things as nicely as I could where they were missing from Google as usual:

- I re-tested Linux Kernel Module Cheat and made some small improvements. Things still worked from a Ubuntu 24.10 host (using Docker to Ubuntu 22.04), and I also checked that kernel 6.8 builds and GDB step debugs after adding the newly required config CONFIG_DEBUG_INFO_DWARF_TOOLCHAIN_DEFAULT, also mentioned that at: Why are there no debug symbols in my vmlinux when using gdb with /proc/kcore?

- I contributed some simple updates to github.com/martinezjavier/ldd3 getting it closer to working on Linux kernel v6.8. That repository aims to keep the venerable examples from Linux kernel module book LDD3 alive on newer kernels, and is a very good source for kernel module developers.

- How to compile a Linux kernel module?: wrote a quick Ciro-approved tutorial

- Dynamic array in Linux kernel module: I gave an educational example of a dynamic byte array (like std::string) using the kvmalloc family of allocators

- quickemu: this is a good emulator manager and I think I'll be using it for Ubuntu images when needed from now on. I wrote:

- How to run Ubuntu desktop on QEMU?: an introductory tutorial to the software as their README is not that good as is often the case. It's hard for project authors to predict what new users want or not. This is my second answer to this question, the previous one focusing on a more manual approach without third party helpers.

- How to share folder between guest/host? (Quickemu): I explained how to setup a 9p mount to share a directory between guest and host

- Error :: You must put some 'source' URIs in your sources.list: updated this answer for Ubuntu 24.04. This issue comes up when you want to do either of:

sudo apt build-dep

sudo apt source

which don't work by default, and my answer explains how to do it from the GUI and CLI. The CLI method is especially important for Docker images. Since Ubuntu doesn't offer a stable CLI method for this, the method breaks from time to time and we have to find the new config file to edit.

- What is hardware enablement (HWE)?: I learned a bit better how Ubuntu structures its kernel releases for each Ubuntu release

Some of the main issues I had were:

- compiling Linux kernel for Ubuntu is extremely slow. I was used to compiling for embedded systems with Buildroot, which finishes in minutes, but for Ubuntu it takes hours, presumably because they enable as many drivers as possible to make a single ISO work on as many different computers as possible, which makes sense, but also makes development harder

- my QEMU setup for Ubuntu was not quite as streamlined and I relearned a few things and set up quickemu. By chance I had recently come across quickemu for testing OurBigBook on MacOS, but I had to learn a bit how to set it up reasonably too
