Personal computer Updated 2025-07-16

Personalized learning Updated 2025-07-16

Inferior compared to self-directed learning, but better than the traditional "everyone gets the same" approach.

Phase shift gate Updated 2025-07-16

Phi Updated 2025-07-16

C3 Updated 2025-07-16

Year 4 Updated 2025-07-16

Physics journal Updated 2025-07-16

The strongest are:

- early 20th century: Annalen der Physik: God OG physics journal of the early 20th century, before the Nazis fucked German science back to the Middle Ages

- 20s/30s: Nature started picking up strong

- 40s/50s: American journals started to come in strong after all the genius Jews escaped from Germany, notably Physical Review Letters

Symbolic artificial intelligence Updated 2025-07-16

Synthetic data Updated 2025-07-16

Telegram (software) Updated 2025-07-16

Not end-to-end encrypted by default, WTF... you have to create "secret chats" for that:

You can't sync secret chats across devices. Signal handles that perfectly by sending E2EE messages across devices. This is a deal breaker because Ciro needs to type with his keyboard.

Desktop does not have secret chats: www.reddit.com/r/Telegram/comments/9beku1/telegram_desktop_secret_chat/ This is likely because it does not store chats locally: as of 2019 it just loads them from the server every time, like the web version: www.reddit.com/r/Telegram/comments/baqs63/where_are_chats_stored_on_telegram_desktop/ So it cannot have a private key.

Allows you to register a public username and not have to share phone number with contacts: telegram.org/blog/usernames-and-secret-chats-v2.

Self deleting messages added to secret chats in Q1 2021: telegram.org/blog/autodelete-inv2

Can delete messages from the device of the person you sent it to, no matter how old.

Telephone-based system Updated 2025-07-16

This section is about telecommunication systems that are based on top of telephone lines.

Telephone lines were ubiquitous from early on, and many technologies used them to send data, including long after VoIP made regular phone calls obsolete.

These market forces tended to eventually crush non-telephone-based systems such as telex. Maybe in that case it was just that the name sounded like a thing of the 50's. But still. Dead.

Long Distance by AT&T (1941)

Source. youtu.be/aRvFA1uqzVQ?t=219 is perhaps the best moment, which attempts to correlate the exploration of the United States with the founding of the U.S. states.

Point-contact transistor Updated 2025-07-16

As the name suggests, this is not very sturdy, and was quickly replaced by the bipolar junction transistor.

Text-based user interface Updated 2025-07-16

Political parties in the United States Updated 2025-07-16

Polyphyly Updated 2025-07-16

Basically means that parallel evolution happened. Some cool ones:

- homeothermy: mammals and birds

- animal flight: bats, birds and insects

- multicellularity: evolved a bunch of times

Positrons are electrons travelling back in time Updated 2025-07-16

Drosophila connectome Updated 2025-07-16

The hard part then is how to make any predictions from it:

- 2024 www.nature.com/articles/d41586-024-02935-z Fly-brain connectome helps to make predictions about neural activity. Summary of "Connectome-constrained networks predict neural activity across the fly visual system" by J. K. Lappalainen et al.

2024: www.nature.com/articles/d41586-024-03190-y Largest brain map ever reveals fruit fly's neurons in exquisite detail

As of 2022, it had been almost fully decoded by post mortem connectome extraction with microtome!!! 135k neurons.

- 2021 www.nytimes.com/2021/10/26/science/drosophila-fly-brain-connectome.html Why Scientists Have Spent Years Mapping This Creature’s Brain by New York Times

That article mentions the humongous paper elifesciences.org/articles/66039 "A connectome of the Drosophila central complex reveals network motifs suitable for flexible navigation and context-dependent action selection" by a group from Janelia Research Campus. The paper is so large that it makes eLife hang.

Primate Updated 2025-07-16

Probably quantum secure encryption algorithm Updated 2025-07-16

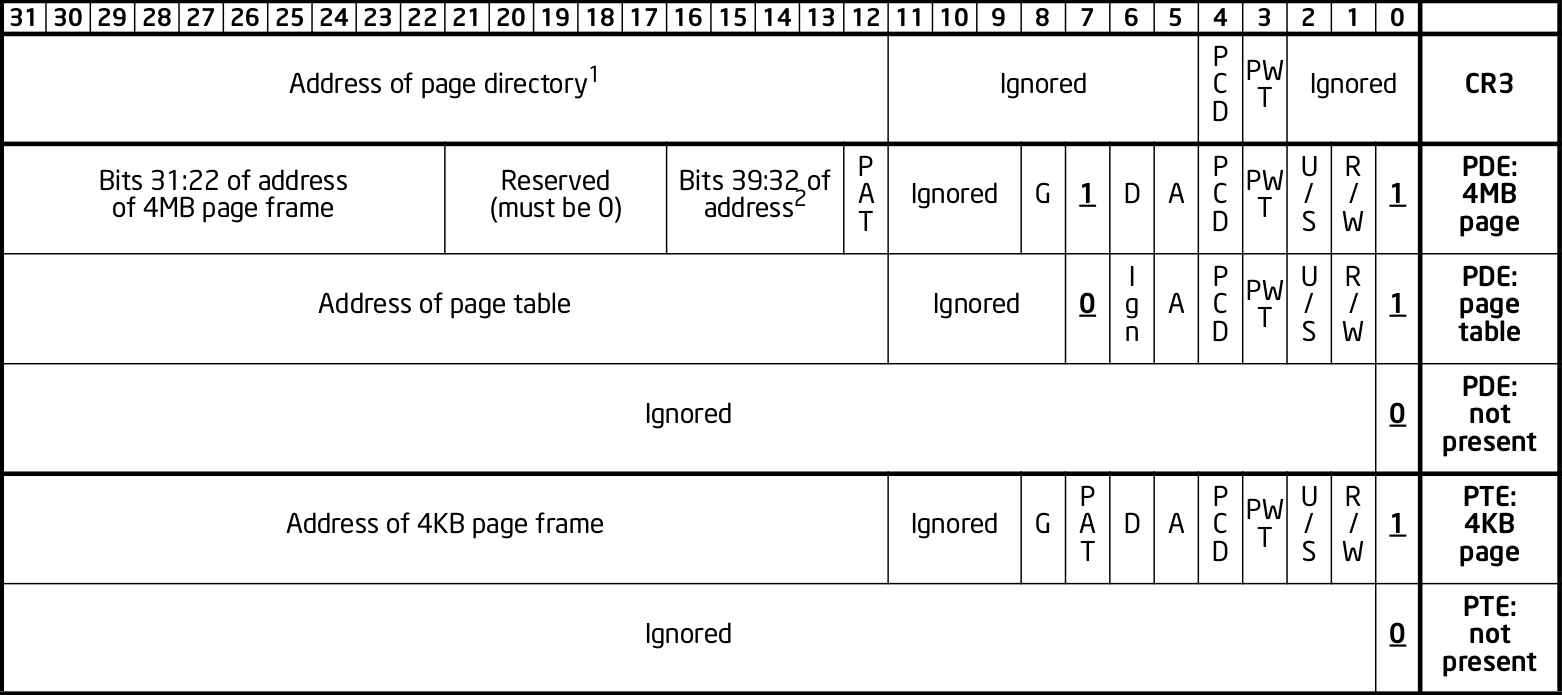

x86 Paging Tutorial Page table entries Updated 2025-07-16

The exact format of table entries is fixed by the hardware.

The page table is then an array of struct.

On this simplified example, the page table entries contain only two fields:

bits   function
-----  -----------------------------------------
20     physical address of the start of the page
1      present flag

so in this example the hardware designers could have chosen the size of each page table entry to be 21 bits instead of 32 as we've used so far.

All real page table entries have other fields, notably fields to set pages to read-only for Copy-on-write. This will be explained elsewhere.

It would be impractical to align things at 21 bits since memory is addressable by bytes and not bits. Therefore, even if only 21 bits are needed in this case, hardware designers would probably choose 32 to make access faster, and just reserve the remaining bits for later usage. The actual value on x86 is 32 bits.
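As a rough sketch of the simplified entry described above, the two fields can be packed into one 32-bit word. The bit positions used here (page number in the top 20 bits, present flag in bit 0, everything in between reserved) are an assumption made for illustration, and the `simple_pte_*` names are hypothetical helpers, not anything defined by the hardware.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical layout for the simplified entry (an assumption, not a spec):
 *   bits 31..12  20-bit physical page number (page start address >> 12)
 *   bits 11..1   reserved, kept at zero
 *   bit  0       present flag
 */
typedef uint32_t simple_pte;

#define SIMPLE_PTE_PRESENT     0x1u
#define SIMPLE_PTE_FRAME_SHIFT 12

/* Build an entry from a 20-bit page number and a present flag. */
static simple_pte simple_pte_make(uint32_t frame, int present) {
    assert(frame < (1u << 20)); /* only 20 bits are available */
    return (frame << SIMPLE_PTE_FRAME_SHIFT) |
           (present ? SIMPLE_PTE_PRESENT : 0u);
}

/* Extract the 20-bit page number. */
static uint32_t simple_pte_frame(simple_pte e) {
    return e >> SIMPLE_PTE_FRAME_SHIFT;
}

/* Physical address of the first byte of the page (4 KiB pages assumed). */
static uint32_t simple_pte_page_start(simple_pte e) {
    return e & 0xFFFFF000u;
}

/* 1 if the present flag is set, 0 otherwise. */
static int simple_pte_present(simple_pte e) {
    return (e & SIMPLE_PTE_PRESENT) != 0;
}
```

With this layout, `simple_pte_make(0x12345, 1)` gives `0x12345001`: only 21 of the 32 bits carry information, and the 11 reserved bits are the room left for later usage.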

Here is a screenshot of the Intel manual figure "Formats of CR3 and Paging-Structure Entries with 32-Bit Paging", showing the structure of a page table entry in all its glory: Figure 1. "x86 page entry format".

The fields are explained in the manual just after.
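To get a feel for a few of those fields, here is a minimal sketch that decodes a 32-bit paging entry mapping a 4 KiB page. The bit positions (P in bit 0, R/W in bit 1, page address in bits 31..12) follow the Intel manual format, but the `x86_pte_*` helper names are made up for this example, and only a handful of the fields are covered.

```c
#include <assert.h>
#include <stdint.h>

/* A few flag bits of a 32-bit paging page table entry (4 KiB page). */
#define X86_PTE_P         (1u << 0)   /* present */
#define X86_PTE_RW        (1u << 1)   /* 0 = read-only, 1 = writable */
#define X86_PTE_US        (1u << 2)   /* 0 = supervisor only, 1 = user */
#define X86_PTE_A         (1u << 5)   /* accessed */
#define X86_PTE_D         (1u << 6)   /* dirty */
#define X86_PTE_ADDR_MASK 0xFFFFF000u /* bits 31..12: page frame address */

/* 1 if the entry maps a page, 0 otherwise. */
static int x86_pte_present(uint32_t pte) {
    return (pte & X86_PTE_P) != 0;
}

/* 1 if writes are allowed through this entry, 0 otherwise. */
static int x86_pte_writable(uint32_t pte) {
    return (pte & X86_PTE_RW) != 0;
}

/* Physical address of the start of the page; only meaningful if present. */
static uint32_t x86_pte_page_addr(uint32_t pte) {
    assert(x86_pte_present(pte));
    return pte & X86_PTE_ADDR_MASK;
}

/* Clear R/W, which is the kind of change a copy-on-write setup would make. */
static uint32_t x86_pte_mark_read_only(uint32_t pte) {
    return pte & ~X86_PTE_RW;
}
```

For example, the entry `0x00ABC003` is present and writable and points at physical address `0x00ABC000`; after `x86_pte_mark_read_only` it becomes `0x00ABC001`.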
