There are two ways:

- manually dumping your brain on media such as notebooks, wikis or videos, i.e. forming a personal knowledge base

- technically using sensors for brain scanning

rwxrob.github.io/zet, visible at rwxrob.github.io/zet/dex/changes. Custom closed source system? No table of contents.

Interesting and weird dude who is into livestreaming and living off his limousine and mentoring people about programming:

This is not "open" content. It is illegal to copy anything from this zettelkasten for any other purpose whatsoever that is not guaranteed under American "fair use" copyright law. Violators will be prosecuted. This is to protect myself from people straight-up lying about me and my current position on any topic based on what is here. I'm very serious about prosecution. I will find you and ensure you receive consequences if you abuse this content and misrepresent me in any way.

Also into bikes: rwxrob.github.io/zet/2587/ which is cool:

Wherever my wife is. I am not a solo digital nomad. I’m a guy who lives for months at a time while traveling around all over either in my car or on my bike. In fact, finding a temporary “home” for my car is usually the harder question. I like to drive to a centralized destination and do big loops of bike touring returning to that spot. Then, when I need a break, I drive home to my wife, Doris, recuperate, and prepare for next season.

The doing loops thing makes a lot of sense, it is how Ciro Santilli also likes to explore and eventually get bored of his current location.

Likely implies whole brain emulation and therefore AGI.

Wikipedia defines Mind uploading as a synonym for whole brain emulation. This sounds really weird, as "mind uploading" suggests something much simpler: brain dumping, or perhaps reuploading a brain dump to a brain.

Superintelligence by Nick Bostrom (2014) section "Whole brain emulation" provides a reasonable setup: post mortem, take a brain, freeze it, then cut it into fine slices with a Microtome, and then inspect slices with an electron microscope after some kind of staining to determine all the synapses.

Likely implies AGI.

Lists:

- www.bibsonomy.org/user/bshanks/education fantastic list, presumably by this guy:

- www.reddit.com/r/Zettelkasten/comments/168cmca/which_note_taking_app_for_a_luhmann_zettlekasten/

- www.reddit.com/r/productivity/comments/18vvavl/best_free_notetaking_app_switching_from_evernote/

- github.com/topics/note-taking has a billion projects. Oops.

- github.com/MaggieAppleton/digital-gardeners

- www.noteapps.ca/

Personal knowledge base software recommendation threads:

TODO look into those more:

- roamresearch.com/ no public graphs

- nesslabs.com/roam-research-alternatives a bunch of open source alternatives to it

- Trilium Notes. Notable project! Pun unintended!

- Stroll giffmex.org/stroll/stroll.html. How to publish? How to see tree?

- tiddlyroam joekroese.github.io/tiddlyroam/ graph rather than text searchable ToC. Public instance? Multiuser?

- Athens github.com/athensresearch/athens rudimentary WYSIWYG

- Logseq github.com/logseq/logseq no web interface/centralized server?

- itsfoss.com/obsidian-markdown-editor. Closed source. They have an OK static website publication mechanism: publish.obsidian.md/ram-rachum-research/Public/Agents+aren't+objective+entities%2C+they're+a+model but leaf node view only for now, no cross source page render. They are committed to having plaintext source which is cool: twitter.com/kepano/status/1675626836821409792

- CherryTree github.com/giuspen/cherrytree written in C++, GTK, WYSIWYG

- github.com/dullage/flatnotes: Python

- github.com/usememos/memos: Go

- github.com/siyuan-note/siyuan: Go

- github.com/vnotex/vnote: C++

- github.com/Laverna/laverna: JavaScript

- github.com/taniarascia/takenote

- github.com/silverbulletmd/silverbullet: TypeScript, Markdown, web-based WYSIWYG, focus on querying

Major downsides that most of those personal knowledge databases have:

- very little/no focus on public publishing, which is the primary focus of OurBigBook.com

- either limited or no multiuser features, e.g. edit protection and cross user topics

- graph based instead of tree based. For books we need a single clear ordering of a tree. Graph should come as a secondary thing through tags.
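The tree-first, tags-second idea from the last bullet can be sketched in a few lines of Python. This is only a hypothetical illustration, not how OurBigBook or any of the tools listed above is implemented: the names `Node`, `toc` and `tagged`, and the sample titles, are made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One article: children are ordered, tags are unordered."""
    title: str
    children: list["Node"] = field(default_factory=list)
    tags: set[str] = field(default_factory=set)


def toc(node: Node, depth: int = 0) -> list[str]:
    """Depth-first walk: gives the single linear order a book needs."""
    lines = ["  " * depth + node.title]
    for child in node.children:
        lines.extend(toc(child, depth + 1))
    return lines


def tagged(node: Node, tag: str) -> list[str]:
    """Secondary graph view: every title carrying a tag, in tree order."""
    found = [node.title] if tag in node.tags else []
    for child in node.children:
        found.extend(tagged(child, tag))
    return found


# Hypothetical sample tree: two branches that share the "fly" tag.
root = Node("Brain", [
    Node("Connectome", [Node("Drosophila connectome", tags={"fly"})]),
    Node("Virtual reality for animals", [Node("Fruit fly setup", tags={"fly"})]),
])

print("\n".join(toc(root)))   # indented table of contents
print(tagged(root, "fly"))    # cross-cutting view through tags
```

The tree alone decides the reading order, while the tag lookup cuts across branches without changing that order.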

Closed source dump:

- www.toodledo.com

- Simplenote: en.wikipedia.org/wiki/Simplenote, by WordPress.com operator company Automattic

github.com/foambubble/foam impressive.

Open source Roam Research clone.

Markdown based, Visual Studio Code based.

Template project: github.com/foambubble/foam-template.

Publishing possible but not mandatory focus, main focus is self notes. Publishing guide at: foambubble.github.io/foam/user/recipes/recipes.html#publish Related: jackiexiao.github.io/foam/reference/publishing-pages/.

They seem to use graphs more than trees which will complicate publication.

TODO are IDs correctly implemented and independent from source file location? Are there any examples? github.com/foambubble/foam/issues/512

A CLI tool at: github.com/foambubble/foam-cli

Intro/docs: www.jonmsterling.com/jms-005P.xml. It is very hard to find information in that system however, largely because they don't seem to have a proper recursive cross file table of contents.

This is the project with the closest philosophy to OurBigBook that Ciro Santilli has ever found. It just tends to be even more idealistic than OurBigBook in general, which is insane!

"Docs" at: www.jonmsterling.com/foreign-forester-jms-005P.xml. Sample repo at: github.com/jonsterling/forest but all parts of interest are in submodules on the author's private Git server.

Example:

- sample source file: git.sr.ht/~jonsterling/public-trees/tree/2356f52303c588fadc2136ffaa168e9e5fbe346c/item/jms-005P.tree

- appears rendered at: www.jonmsterling.com/foreign-forester-jms-005P.xml

Author's main social media account seems to be: mathstodon.xyz/@jonmsterling e.g. mathstodon.xyz/@jonmsterling/111359099228291730 His home page:

They have \Include like OurBigBook, nice: www.jonmsterling.com/jms-007L.xml, but OMG that name \transclude{xxx-NNNN}!! It seems to be possible to have human readable IDs too if you want: www.jonmsterling.com/foreign-forester-armaëlguéneau.xml is under trees/public/roladex/armaëlguéneau.tree.

Headers have open/close:

\subtree[jms-00YG]{}

OurBigBook considered this, but went with parent= instead finally to avoid huge lists of close parenthesis at the end of deep nodes.

One really cool thing is that the headers render internal links as clickable, which brings it all closer to the "knowledge base as a formal ontology" approach.

The markup has relatively few insane constructs, notably you need explicit open paragraphs everywhere \p{}?! OMG, too idealistic, not enough pragmatism. There are however a few insane constructs:

The markup is documented at: www.jonmsterling.com/foreign-forester-jms-007N.xml

Jon has some very good theory of personal knowledge base, rationalizing several points that Ciro Santilli had in his mind but hadn't fully put into words, which is quite cool.

OCaml dependency is not so bad, but it relies on actual LaTeX for maths, which is bad. Maybe using JavaScript for OurBigBook wasn't such a bad choice after all, KaTeX just works.

Viewing the generated output HTML directly requires security.fileuri.strict_origin_policy which is sad, but using a local server solves it. So it appears to actually pull pieces together with JavaScript? Also output files have .xml extension, the idealism! They are reconsidering that though: www.jonmsterling.com/foreign-forester-jms-005P.xml#tree-8720.

The Ctrl+K article dropdown search navigation is quite cool.

\rel and \meta allow for arbitrary ontologies between nodes as semantic triples. But they suffer from one fatal flaw: the relations are not headers in themselves. We often want to explain why a relation is true, give intuition to it, and refer to it from other nodes. This is obviously how the brain works: relations are nodes just like objects.

They do appear to be putting full trees on every toplevel regardless how deep and with JavaScript turned off e.g.:

which is cool but will take lots of storage. In OurBigBook Ciro Santilli only does that on OurBigBook Web where each page can be dynamically generated.
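Going back to the point about relations being nodes just like objects, a minimal Python sketch of that idea follows. It is a hypothetical data model, not Forester's \rel/\meta implementation nor OurBigBook's: `Node`, `triple`, `relations_about` and all the IDs are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """An article. If `triple` is set, the article is also a relation."""
    id: str
    body: str = ""
    # (subject id, predicate, object id); hypothetical encoding
    triple: Optional[tuple[str, str, str]] = None


def relations_about(nodes: dict[str, Node], node_id: str) -> list[str]:
    """IDs of relation nodes that mention node_id as subject or object."""
    return [
        n.id
        for n in nodes.values()
        if n.triple is not None and node_id in (n.triple[0], n.triple[2])
    ]


nodes = {n.id: n for n in [
    Node("fruit-fly", "Drosophila melanogaster."),
    Node("connectome", "Map of all the synapses of a brain."),
    # The relation has its own ID and body, so it can be explained...
    Node(
        "fruit-fly-has-connectome",
        "Intuition and justification for the relation go here.",
        triple=("fruit-fly", "has", "connectome"),
    ),
    # ...and referred to from other relations, just like any other node.
    Node(
        "flywire-investigates-it",
        triple=("flywire", "investigates", "fruit-fly-has-connectome"),
    ),
]}

print(relations_about(nodes, "fruit-fly"))
print(relations_about(nodes, "fruit-fly-has-connectome"))
```

Because the relation is stored as an ordinary node, it gets a body of its own and can appear as the subject or object of another triple.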

Good:

- WYSIWYG

- Extended-Markdown-based

- help.obsidian.md/Getting+started/Sync+your+notes+across+devices they do have a device sync mechanism

- it watches the filesystem and if you change anything it gets automatically updated on UI

- help.obsidian.md/links#Link+to+a+block+in+a+note you can set (forcibly scoped) IDs to blocks. But it's not exposed on WYSIWYG?

Bad:

- forced ID scoping on the tree as usual

- no browser-only editor, it's just a local app apparently:

- obsidian.md/publish they have a publish function, but you can't see the generated websites with JavaScript turned off. And they charge you 8 dollars / month for that shit. Lol.

- block elements like images and tables cannot have captions?

- they kind of have synonyms: help.obsidian.md/aliases but does it work on source code?

Crazy overlaps with Ciro Santilli's OurBigBook Project, Wikipedia states:

Administrators of Project Xanadu have declared it superior to the World Wide Web, with the mission statement: "Today's popular software simulates paper. The World Wide Web (another imitation of paper) trivialises our original hypertext model with one-way ever-breaking links and no management of version or contents."

Closed source, no local editing? PDF annotation focus.

Co-founded by this dude: x.com/iamdrbenmiles

Docs: quartz.jzhao.xyz/

Sponsored by Obsidian itself!

Written in TypeScript!

Markdown support!

Everything is forcibly scoped to files quartz.jzhao.xyz/features/wikilinks:

[[Path to file#anchor|Anchor]]

Global table of contents based on the on-disk file structure: quartz.jzhao.xyz/features/explorer with customizable sorting/filtering.

They were first, but apparently fell down a bit as other cheaper and more open alternatives came up: www.reddit.com/r/RoamResearch/comments/107ktxm/is_roam_research_over/

Interesting "gradual" WYSIWYG. You get inline previews for things like images, maths and links. And if you click to edit the thing, the preview mostly goes away and becomes the corresponding source code instead.

Local only.

Zim

Mathematics requires a plugin and a full LaTeX install: zim-wiki.org/manual/Plugins/Equation_Editor.html They have a bunch of plugins: zim-wiki.org/manual/Plugins.html

Can only link to toplevel of each source, not subheaders? And subpages get forced scope. github.com/zim-desktop-wiki/zim-desktop-wiki

Publishing to static HTML can be done with:

zim --export Notes -o out

The output does not contain any table of contents? There is a plugin however: zim-wiki.org/manual/Plugins/Table_Of_Contents.html

It is unclear if their markup is compatible with an existing language or if it was made up from scratch. Wikipedia says:

You can't determine the ordering of pages at the same level, alphabetical ordering is forced. The toplevel is encoded in notebook.zim:

[Notebook]
home=Home

Feature request: github.com/zim-desktop-wiki/zim-desktop-wiki/issues/32. It's not usable as a publishing system!

Doesn't seem to have image captions: superuser.com/questions/1285898/picture-description-in-zim-wiki-0-64

In the 2020s, this refers to writing down everything you know, usually in some graph structured way.

This is somewhat the centerpiece of Ciro Santilli's documentation superpowers: dumping your brain into text form, which he has been doing through Ciro Santilli's website.

This is also the closest one can get to immortality pre full blown transhumanism.

It is a good question, how much of your knowledge you would be able to give to others with text and images. It is likely almost all of it, except for coordination/signal processing tasks.

His passion for braindumping like this is a big motivation behind Ciro Santilli's OurBigBook.com work.

Bibliography:

zettelkasten.de/posts/overview/ mentions one page to rule them all:

How many Zettelkästen should I have? The answer is, most likely, only one for the duration of your life. But there are exceptions to this rule.

Lists of digital gardens:

- www.nature.com/articles/d41586-019-02209-z The four biggest challenges in brain simulation (2019)

Ciro Santilli invented this term, derived from "hardware in the loop", to refer to simulations in which the brain, the body and the physical world of the organism are all modelled.

E.g. just imagine running:

Ciro Santilli invented this term, it refers to mechanisms in which you put an animal in a virtual world that the animal can control, and where you can measure the animal's outputs.

- MouseGoggles www.researchsquare.com/article/rs-3301474/v1 | twitter.com/hongyu_chang/status/1704910865583993236

- Fruit fly setup from Penn State: scitechdaily.com/secrets-of-fly-vision-for-rapid-flight-control-and-staggeringly-fast-reaction-speed/

A Drosophila melanogaster has about 135k neurons, and we only managed to reconstruct its connectome in 2023.

The human brain has 86 billion neurons, about 1 million times more. Therefore, it is obvious that we are very very far away from a full connectome.
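A quick check of that ratio, using only the two neuron counts quoted above (the variable names are made up for the example). It comes out at roughly 0.6 million, which is the same order of magnitude as 1 million and does not change the conclusion.

```python
fly_neurons = 135_000             # Drosophila melanogaster, figure quoted above
human_neurons = 86_000_000_000    # human brain, figure quoted above

# How many fly brains' worth of neurons fit in one human brain.
ratio = human_neurons / fly_neurons

print(f"{ratio:,.0f}")  # prints 637,037
```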

Instead however, we could look at larger scales of connectome, and then try from that to extract modules, and then reverse engineer things module by module.

This is likely how we are going to "understand how the human brain works".

Some notable connectomes:

This is the most plausible way of obtaining a full connectome looking from 2020 forward. Then you'd observe the slices with an electron microscope + appropriate Staining. Superintelligence by Nick Bostrom (2014) really opened Ciro Santilli's eyes to this possibility.

Once this is done for a human, it will be one of the greatest milestones of humanity, comparable perhaps to the Human Genome Project. But of course, privacy issues are incredibly pressing in this case, even more than in the Human Genome Project, as we would essentially be able to read the brain of the person after their death.

This is also a possible path towards post-mortem brain reading.

This is not a label that Ciro Santilli likes to give lightly. But maybe sometimes, it is inevitable.

Bibliography:

His father fought a lot with the stupid educational system to try and move his son to his full potential and move to more advanced subjects early.

A crime of society to try and prevent it. They actually moved the family from Singapore to Malaysia for a learning opportunity for the son. Amazing.

This is the perfect illustration of one of Ciro Santilli's most important complaints about the 2020 educational system:

and why Ciro created OurBigBook.com to try and help fix the issue.

Bibliography:

Ainan Cawley: Child prodigy (2013)

- youtu.be/Ugi74cwPMSg?t=137 finding the right school

Bibliography: cyprus-mail.com/2021/02/10/a-focus-on-maths/

Article likely written by him: www.theguardian.com/commentisfree/2010/feb/22/home-schooling-register-families

“Especially my father. He was doing most of it and he is a savoury, strong character. He has strong beliefs about the world and in himself, and he was helping me a lot, even when I was at university as an undergraduate.”

An only child, Arran was born in 1995 in Glasgow, where his parents were studying at the time. His father has Spanish lineage, having a great grandfather who was a sailor who moved from Spain to St Vincent in the Carribean. A son later left the islands for the UK where he married an English woman. Arran’s mother is Norwegian.

One of the articles says his father has a PhD. TODO where did he work? What's his PhD on? Photo: www.topfoto.co.uk/asset/1357880/

www.thetimes.co.uk/article/the-everyday-genius-pxsq5c50kt9:

en.wikipedia.org/wiki/Ruth_Lawrence

When Lawrence was five, her father gave up his job so that he could educate her at home.

www.dailymail.co.uk/femail/article-3713768/Haunting-lesson-today-s-TV-child-geniuses-Ruth-Lawrence-Britain-s-famous-prodigy-tracked-father-drove-heard-troubling-tale.html

he had tried it once before - with an older daughter, Sarah, one of three children he had by a previous marriage. That experiment ended after he separated from Sarah's increasingly concerned mother, Jutta. He soon found a woman more in tune with his radical ideas in his next spouse, Sylvia Greybourne

The hard part then is how to make any predictions from it:

- 2024 www.nature.com/articles/d41586-024-02935-z Fly-brain connectome helps to make predictions about neural activity. Summary of "Connectome-constrained networks predict neural activity across the fly visual system" by J. K. Lappalainen et al.

2024: www.nature.com/articles/d41586-024-03190-y Largest brain map ever reveals fruit fly's neurons in exquisite detail

As of 2022, it had been almost fully decoded by post mortem connectome extraction with microtome!!! 135k neurons.

- 2021 www.nytimes.com/2021/10/26/science/drosophila-fly-brain-connectome.html Why Scientists Have Spent Years Mapping This Creature’s Brain by New York Times

That article mentions the humongous paper elifesciences.org/articles/66039 "A connectome of the Drosophila central complex reveals network motifs suitable for flexible navigation and context-dependent action selection" by a group from Janelia Research Campus. The paper is so large that it makes eLife hang.

Research consortium investigating the Drosophila connectome.

Homepage: flywire.ai

The Neurokernel Project aims to build an open software platform for the emulation of the entire brain of the fruit fly Drosophila melanogaster on multiple Graphics Processing Units (GPUs).

Bibliography:

- www.nature.com/articles/d41586-023-02559-9 The quest to map the mouse brain by Diana Kwon (2023)

Grouping their mouse brain projects here.

Tutorial: Allen Developing Mouse Brain by Allen Institute (2014)

Ciro Santilli feels it is not for his generation though, and that is one of the philosophical things that saddens him the most in this world.

On the other hand, Ciro's playing with the Linux kernel and other complex software which no single human can ever fully understand cheers him up a bit. But still, the high level view, that we can have...

For now, Ciro's 2D reinforcement learning games.

By cranks:

- www.thehighestofthemountains.com/ has some diagrams. It is unclear how they were obtained, except that they were made over the course of 5 years by a "Space Shuttle Engineer", classic crank appeal to authority. The author believes that brain function is evidence of intelligent design.

External 3D view of the Brodmann areas

The part of the cerebral cortex that is not the neocortex.

Some believe this to be a fundamental unit of the human brain.

atlas.brain-map.org/ omg some amazing things there.

www.nature.com/articles/d41586-023-02600-x They overreached it seems.

Almost since it began, however, the HBP has drawn criticism. The project did not achieve its goal of simulating the whole human brain — an aim that many scientists regarded as far-fetched in the first place. It changed direction several times, and its scientific output became “fragmented and mosaic-like”, says HBP member Yves Frégnac

Bibliography:

Some good mentions of their dynamic duo status at The Race for the Double Helix. Their chemistry and love are palpable during their joint interviews.

Very clearly, Francis is the charismatic one, and James is the nerd.

Starting line:The Eighth Day of Creation explains the "salt" part as that was the usual way to prepare DNA for X-ray crystallography, where something binds with the phosphate groups of DNA

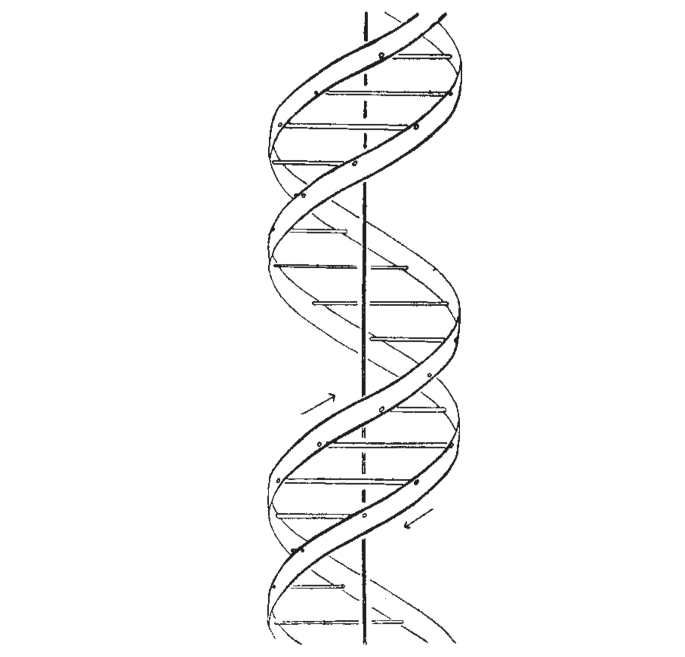

We wish to suggest a structure for the salt of deoxyribose nucleic acid (D.N.A,). This structure has novel features which are of considerable biological interest.

The paper then shoots down other previously devised helical structures, notably some containing 3 strands or phosphate on the inside.

Then they briefly describe their structure, and promise more details in future articles. This was mostly a short one-page priority note.

Then they drop their bombshell conclusion:

It has not escaped our notice that the specific pairing we have postulated immediately suggests a possible copying mechanism for the genetic material.

Both Wilkins and Rosalind Franklin are acknowledged at the end.

Good mentions at The man who loved numbers, notably youtu.be/PqP2c5xNaTU?t=349 where Bela Bollobas, friend of Littlewood, talks about their collaboration.

The Power of a Mother's Love by Alan Watts

Term invented by Ciro Santilli, it refers to Richard Feynman, after helping to build the atomic bomb:

This should come as no surprise, as to become successful, you have to do something different from the masses, and often take irrational risks that make you worse off on average.

Some mentions:

- in episode 5 "The Partner" of The Playlist, an awesome 2022 Netflix series about the history of Spotify, a hypothetical Peter Thiel diagnoses Spotify co-founder Martin Lorentzon with ADHD, and mentions that a large number of rich people are neurodiverse, 30% in the tech industry as opposed to 5% in the general population

www.quora.com/How-can-I-be-as-great-as-Bill-Gates-Steve-Jobs-Elon-Musk-or-Sir-Richard-Branson/answer/Justine-Musk is a fantastic answer by Justine Musk, Elon Musk's ex-wife, to the question:

How can I be as great as Bill Gates, Steve Jobs, Elon Musk or Sir Richard Branson?

One of her key theses is that many successful people are neurodiverse:

These people tend to be freaks and misfits who were forced to experience the world in an unusually challenging way. They developed strategies to survive, and as they grow older they find ways to apply these strategies to other things, and create for themselves a distinct and powerful advantage. They don't think the way other people think. They see things from angles that unlock new ideas and insights. Other people consider them to be somewhat insane.
